
It’s a weird feeling, cruising around Silicon Valley in a car driven by no one. I am in the back seat of one of Google’s self-driving cars – a converted Lexus SUV with lasers, radar and low-res cameras strapped to the roof and fenders – as it manoeuvres through the streets of Mountain View, California, not far from Google’s headquarters. I grew up about eight kilometres from here and remember riding around on these same streets on a Schwinn Sting-Ray. Now, I am riding an algorithm, you might say – a mathematical equation, which, written as computer code, controls the Lexus. The car does not feel dangerous, nor does it feel like it is being driven by a human. It rolls to a full stop at stop signs, veers too far away from a delivery van and taps the brakes for no apparent reason as we pass a line of parked cars.

I wonder if the flaw is in me, not the car: Is it reacting to something I can’t see? The car is capable of detecting the motion of a cat, or a car crossing the street hundreds of metres away in any direction, day or night (snow and fog can be another matter). “It sees much better than a human being,” Dmitri Dolgov, the lead software engineer for Google’s self-driving-car project, says proudly. He is sitting behind the wheel, his hands on his lap. Just in case.

As we stop at the intersection, waiting for a left turn, I glance over at a laptop in the passenger seat that provides a real-time look at how the car interprets its surroundings. On it, I see a gridlike world of colourful objects – cars, trucks, bicyclists, pedestrians – drifting by in a video-game-like tableau. Each sensor offers a different view – the lasers provide three-dimensional depth, the cameras identify road signs, turn signals, colours and lights. The computer in the back processes all this information in real time, gauging the speed of oncoming traffic, making a judgment about when it is OK to make a left turn. Waiting for the car to make that decision is a spooky moment. I am betting my life that one of the coders who worked on the algorithm for when it’s safe to make a left-hand turn in traffic had not had a fight with his girlfriend (or boyfriend) the night before and screwed up the code. This Lexus is, potentially, a killer robot. One flawed algorithm and I’m dead.

But this is not a bad movie about technology gone wild. Instead, the car waits until there is a generous gap in traffic, then lurches out a little too abruptly and executes the left-hand turn. I remark on the car’s sudden acceleration.

“Yeah, it still drives a little like a teenager,” Dolgov says. “We’re working on that.”


No technology symbolises the progress – and the dangers – of smart machines like self-driving cars. Robots might help build the phone in your pocket and neural networks might write captions for your vacation pictures, but self-driving cars promise to change everything, from how you get from one place to another to how cities are built. They will save lives and reduce pollution. And they will replace the romance of the open road with the romance of riding around in what amounts to a big iPhone.

But the imminent arrival of self-driving cars also brings up serious questions about our relationship with technology that we have yet to resolve. How much control of our lives do we want to give over to machines – and to the corporations that build and operate them? How much risk are we willing to take in doing so? And not just physical risk, as in the case of self-driving cars, but economic risk too, as financial decisions become more and more engineered and controlled by smart machines. With self-driving cars, the invisible revolution that is behind everything from Facebook to robotic warfare invades everyday life, forcing a new kind of accounting with the technological genie.

The self-driving car is not just a research experiment. Already, some Mercedes models can essentially park themselves and apply the brakes to avoid collisions. Tesla recently released Autopilot, a new feature that keeps its cars within their lanes on the highway and a safe distance from other vehicles in stop-and-go traffic, allowing near-hands-free driving. As for fully autonomous cars, Google is not the only one pushing this forward: Virtually every major car manufacturer has a program in development, including Toyota, which recently announced it is investing $1 billion in a new AI lab in Silicon Valley that will focus on, among other things, technology for self-driving cars. But the most aggressive newcomer is Uber, which raided the robotics department at Carnegie Mellon University, hiring away 40 researchers and scientists. Uber co-founder Travis Kalanick has made no secret that his goal is to cut costs by rolling out a fleet of driverless taxis. When I asked Chris Urmson, head of the Google self-driving-car project, how long it would be before fully autonomous cars were on the road, he replied, “It’s my personal goal that my 12-year-old son won’t need to get a driver’s license.”

The arrival of self-driving cars is the result of many converging factors, including advances in machine learning that allow cars to “see”, the proliferation of cheap sensor technology, advances in mapping technology, and the success of electric cars like Tesla. But the biggest factor may be the sense that cars as we know them today are a 20th-century invention poorly suited to our 21st-century world, where everything from climate change to the declining wealth of the middle class is calling into question the need for a throaty V-8, and where big corporations excel at building sexy devices that sell products and siphon off our personal data. As one Apple exec said, teasing rumours that the company was exploring the idea of getting into the auto business, self-driving cars are “the ultimate mobile device”.

I get back in my Hyundai rental after cruising around Mountain View in the Google car, and the first thing I notice is how lousy most human drivers are – pulling out of parking lots without looking, cutting off people during lane changes. I find myself thinking, “The Google car wouldn’t do that.” One study estimates that, by midcentury, self-driving cars could reduce traffic accidents by up to 90 per cent. “We really need to keep in mind how important [the adoption of autonomous vehicles] is going to be,” says Urmson. “We’re talking about [the lives of] 30,000 people in the U.S. – and 1.2 million worldwide. If you think about the opportunity cost of moving slowly, it’s terrifying.”

There are other potential benefits. Self-driving cars are likely to be electric, which will hasten the development of better batteries, reduce pollution and slow climate change. They are likely to be smaller, lighter, simpler – more like fancy gondola pods on wheels than your daddy’s Cadillac. Instead of owning one, you may just rent it for a while, summoning it up on your iPhone when you need it.

But the open road, celebrated by everyone from Jack Kerouac to Dennis Hopper, will be closed. (One roboticist speculates that rural states like Colorado might become “driving parks”, where humans are allowed to feel the freedom of taking the wheel again.) People can be killed when the computer makes a mistake and drives into a tree on a rainy night. By commandeering your vehicle, hackers will have the power of assassins. Without proper data security, your car could become a spy, telling your corporate masters about your every move.

How this will all play out is far from clear. Companies like Google and Uber are developing vehicles that are fully autonomous, no steering wheel needed – just program your destination into a map and away you go. Others, such as Tesla and Toyota, are taking a more incremental approach, betting that cars will take over more of the tedious tasks of driving, but leaving humans in control for the fun (and dangerous) stuff. “What’s clear,” says MIT professor David Mindell, author of Our Robots, Ourselves, a recent book about the revolution in smart machines, “is that the self-driving car is moving very quickly out of the lab and into the real world.”

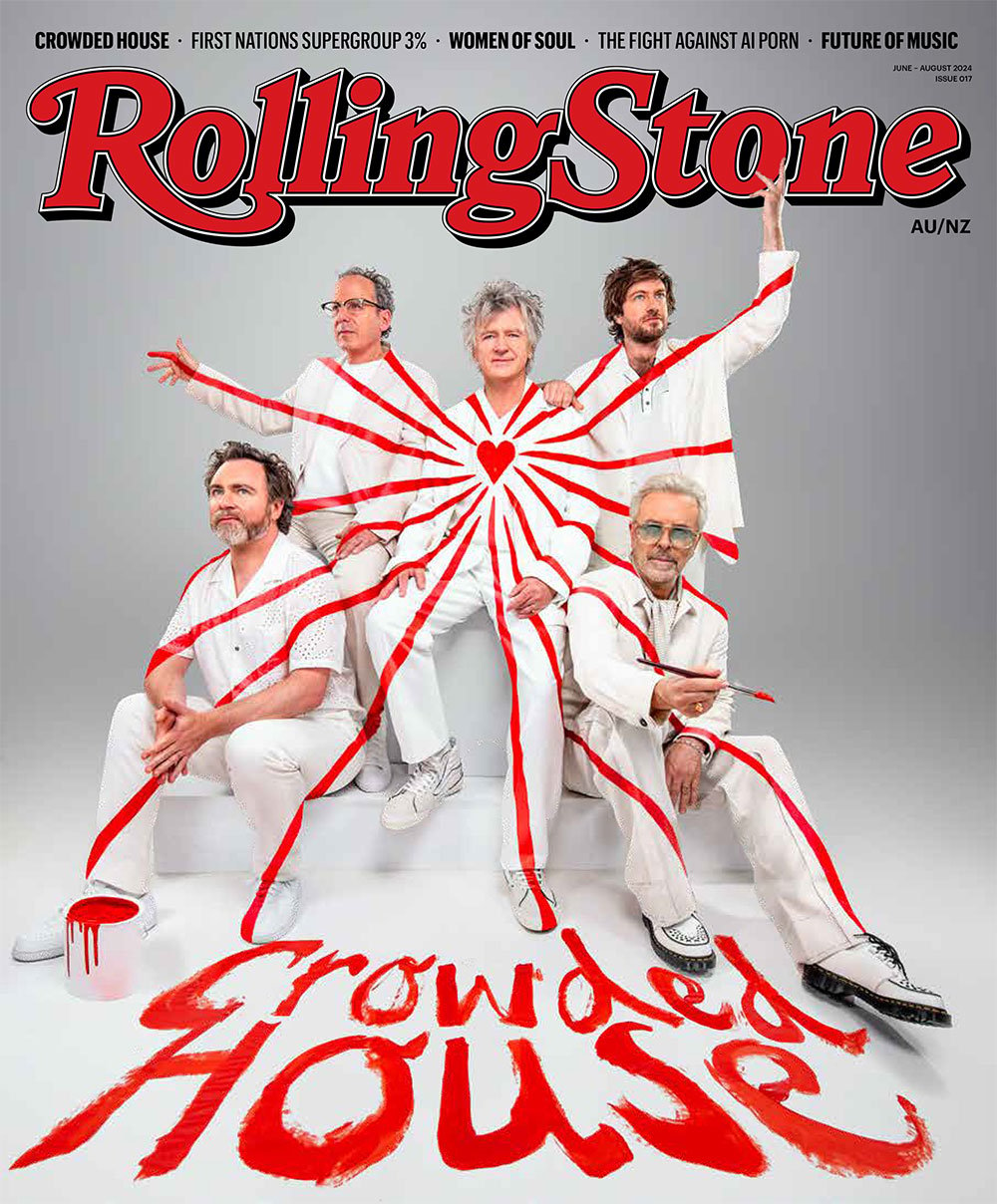

Google’s Chris Urmson and a self-driving-car prototype equipped with lasers, radar and cameras.

Chris Urmson lives less than three kilometres from his office at Google and usually rides his bike to work. At 41, he is a little bashful, not at all a Master of the Universe type. He wears an unfashionable blazer and rolls in a Mazda 5, a minivan that he likes because his boys can get out of the sliding doors easily in tight parking spots.

Urmson’s office, like the entire Google self-driving-car project, is located in the new X (formerly Google X) building, which is about three kilometres from the main Googleplex in Mountain View. X is Google’s semisecret innovation lab, run by scientist-entrepreneur Astro Teller and Google co-founder Sergey Brin. According to Teller, the goal of X is to develop “science-fiction-sounding solutions” to the world’s problems. The building, which is on the site of an old shopping mall, has a hip postindustrial vibe, with concrete floors, glass-walled conference rooms and a cafeteria serving local, mostly organic food. (The day I visited, Brin was wandering around in gym shorts and Crocs.) X has been home to experimental projects like Google Glass, the much-hyped headset, and Project Loon, which is constructing high-altitude balloons to provide Internet access in remote areas.

The self-driving car is the biggest star at X, as well as the project that’s closest to commercialisation. Of the 105 self-driving cars registered in California, 73 belong to Google. While Google did not invent the self-driving car, it can lay claim to having created the industry of self-driving cars – purchasing startups, hiring experts and developing mapping and navigation technologies. Unlike Apple, which is rumoured to be getting into the business of designing and building cars, Google has made it clear that it has no intention of getting into the car-manufacturing business. It wants to control the software inside, to be the operating system for robotic roadsters.

Urmson’s interest in self-driving cars was sparked in 2003, when Carnegie Mellon started building a car for the first DARPA Grand Challenge the following year. The Defense Advanced Research Projects Agency is the secretive research arm of the Pentagon that has played a role in developing everything from the Internet to the stealth technology used in military aircraft. DARPA hoped to spur breakthroughs in autonomous vehicles, which could be useful to the military. Fifteen vehicles entered the race, which took place over a 240-kilometre-long course in the California desert, for a $1 million first prize. The best any vehicle could do was 11.3 kilometres – and that vehicle got stuck and caught fire. The next year, the race was less pathetic: Five vehicles completed a 212-kilometre course, but took seven hours to do it. Carnegie Mellon’s cars finished second and third. Urmson, who was the director of technology for the team’s cars, says, “You could see how this technology was going to advance rapidly.”

Google started its self-driving-car initiative in 2009, in part as an outgrowth of its mapping and Street View projects (once you have a map, it’s not a big leap to engineer a more detailed one to guide a self-driving car). Urmson arrived that year, working under Google engineer Sebastian Thrun (who subsequently left Google to co-found Udacity, an online education startup). Initially, the Google team outfitted Toyota Priuses with cameras, sensors and a revolving laser on the top of the car. It was an expensive retrofit – the laser alone was reportedly $75,000, roughly three times the cost of the Prius itself. Urmson and the crew spent the next few years experimenting with various designs and technologies. One of the biggest questions they had to wrestle with: What role, if any, should human beings have in the operation of the car? In other words, were they developing a fully autonomous vehicle, or just a vehicle to help people drive smarter?


The project came to a crossroads in the autumn of 2012. According to Dolgov, test crews did a lot of highway driving, during which they set up the car to ask the driver to take over whenever it encountered a situation it wasn’t confident about. And what they discovered was not encouraging: “The humans were not paying as much attention as we would have liked them to,” Dolgov says. Instead, they were texting, chatting, daydreaming, whatever. “They were Google employees, and we trained them, and we emphasised how important it was for them to pay attention all the time, but it’s human nature,” he says. “What do you do if the car is asking you to take over because it has encountered a dangerous situation but the human is sleeping?”

So Urmson and his team decided full autonomy was the way to go. In 2013, they began developing a prototype vehicle without a steering wheel or pedals – essentially a pod on wheels. It’s a cute little city car, a cross between an old VW bug and a space capsule. It has a soft front bumper to dampen any impact (God forbid it hits a pedestrian) and a top speed of about 40 km/h (25 mph). As Dolgov puts it, “In a way, it makes the system simpler, because [it’s] completely in control.”

Urmson argues that there are other benefits to developing cars that can drive themselves. “It changes the equation on who it helps,” he says. Blind people, for example. Or the physically handicapped. “The opportunity to give them the ability to move around in cities the way we all take for granted seems like a really big deal,” he says. “And because this technology will be cost-effective, you’ll be able to offer personalised public transit for the cost that you can offer buses today.”

One thing Urmson doesn’t mention is that fully autonomous cars could benefit Google too. Google is an empire built on personal data, which it compiles and leverages for a variety of insanely profitable purposes, above all selling ads. Why wouldn’t it want to be the company you depend on to take you everywhere you want to go – and to sell you things along the way?

Right now, Urmson is focused on making Google cars “sufficiently paranoid” to react to the billions of freaky situations that happen to drivers in the real world. Google cars drive about 16,000 kilometres per week, either in the Mountain View area or in Austin, Texas, or Kirkland, Washington, where the company recently began new street-driving programs. The cars have driven more than 1.5 million kilometres and pretty much have routine driving down pat, which for Google and others is only the beginning. “Now we have to do the hard part,” Gill Pratt, head of Toyota’s autonomous-car initiative, has said. “We have to figure out what to do when there is [no] map; what to do when the road differs from the map; what to do when the unexpected occurs – when the child chasing the ball runs in front of the car, or when somebody changes lanes very fast. These are situations where the dynamics are very hard.”

To see how the cars react in freaky situations, Google is testing them on a track, where people jump out of port-a-potties and throw beach balls at the bumper. But the problem is more complex than just reacting to a beach ball. Shortly after our drive around Mountain View, I ask Dolgov about a hypothetical situation: “Let’s say a Google car is driving down a two-way road and a bicyclist happens to be pedalling next to it. What happens if, just as you are passing the biker, a car that is coming the other way swerves into your lane? If the Google car had to choose between a head-on collision and moving over and hitting a bicyclist, what would the car do?”

“It will not hit the bicyclist,” Dolgov states flatly. “If we can give more room to the oncoming car and do the best we can to let it not hit us, we will do that and get on the brakes as hard as possible to minimise the impact.” This seems like a sound decision – but it’s easy to come up with scenarios where the right thing to do is not clear. What if the car had to choose between sideswiping a car that swerves into its lane and driving off a tree-lined road – do you really want to trust the car’s algorithms to make the right call? “Some of these choices are interesting philosophical questions,” he says. “So then you have to look at it through the lens of what’s happening on the roads today, where human drivers kill more than a million people worldwide.”

It’s true – in most situations, self-driving cars are much safer. They don’t drink, get road rage or text. But the technology is not, and will never be, flawless. Google cars have been involved in a number of fender benders over the years, but until recently none had been the Google car’s fault. Then, last month, for the first time, a Google car caused a minor accident by changing lanes into a bus (no one was hurt). “First time a self-driving car kills someone, it’s going to be big news,” says Danny Shapiro, senior director of automotive at Nvidia, which makes the video-processing chip used in many self-driving cars. Even if they are safer, trusting your life to a machine – or, more specifically, to computer code written by Google (or Apple, or Tesla) – is a big leap. Who decides how much risk is written into the algorithms that control the car? In Our Robots, Ourselves, Mindell imagines a dial running from more risk (drive faster) to less risk (drive slower). But who certifies that the risk settings are accurate? If VW can cheat on emissions testing, why wouldn’t it cheat on safety tests?

Questions like these are why robotics pioneer Rodney Brooks thinks that Google’s faith in fully autonomous cars is overblown. Not because the technology isn’t ready, but because when it comes down to it, people like having a human being on hand – especially when their lives are at stake. Brooks points to the history of trains, which have a vastly simpler control problem: “After years and years of having driverless trains in airports, people are only beginning to be OK with driverless trains in subways.” He points to a June 2009 crash on the Washington Metro, in which a computer-driven train plowed into another train, killing nine people and injuring about 80 others. After the crash, customers demanded that humans regain control of the trains. “When humans were driving, performance dropped because a human driver wasn’t as good at pulling up at the mark, but people said, ‘No, you have to have a human in the loop’,” Brooks points out. It took $18 million in research and upgrades before the first computer-driven trains were back on the tracks.

Eric Horvitz, director of Microsoft Research’s main AI lab in Redmond, Washington, thinks it’s too early to say how all this will play out – but believes we’ll soon see at least one major city switch entirely to public micro transit. “You can imagine cities looking quite different,” Horvitz says. “You get to the outskirts of Paris, you can’t take your car in there, but don’t worry, it’s all covered by very flexible micro transit. You just order up where you want to go, a pod stops, and it zooms you over to that place. Sounds pretty good to me.”

Tesla has one of the world’s most advanced auto-manufacturing plants, with robots and employees together churning out 1,000 cars a week.

If any company could be a poster child for how robots are transforming American manufacturing jobs, it’s Boeing. According to Reuters, Boeing is turning out 20 per cent more planes, but with one-third fewer workers, than it did in the 1990s. In just this past year, it replaced hundreds of employees with 60-ton robots. Boeing says they work twice as fast as people, with two-thirds fewer defects. The impact of automation on the company’s workforce has been dramatic. In 1998, the company made 564 planes a year, employing roughly 217 people per plane. In 2015, it made 762 planes, using about 109 workers per plane. Not surprisingly, a recent White House economic report concluded that labor productivity gains from robotics are similar in magnitude to the productivity gains from steam engines in the 19th century.
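The Boeing figures can be checked by multiplying them out. Below is a back-of-the-envelope sketch in Python. It assumes, since the text does not say, that “workers per plane” means total workforce divided by that year’s output.

```python
# Back-of-the-envelope check of the Boeing figures quoted above.
# Assumption: "workers per plane" = total workforce / planes built that year.
planes_1998, workers_per_plane_1998 = 564, 217
planes_2015, workers_per_plane_2015 = 762, 109

workforce_1998 = planes_1998 * workers_per_plane_1998  # implied headcount, 1998
workforce_2015 = planes_2015 * workers_per_plane_2015  # implied headcount, 2015

output_change = planes_2015 / planes_1998 - 1           # fractional change in planes built
workforce_change = workforce_2015 / workforce_1998 - 1  # fractional change in headcount

print(f"Implied workforce: {workforce_1998:,} -> {workforce_2015:,}")
print(f"Output change: {output_change:+.0%}")
print(f"Workforce change: {workforce_change:+.0%}")
```

Run as written, it prints an implied workforce of 122,388 in 1998 and 83,058 in 2015, a change of -32%. That matches the “one-third fewer workers” figure. The same numbers imply that output rose by +35%, which is more than the 20 per cent quoted. One possible explanation is that the Reuters comparison uses a different 1990s baseline.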

Few economists doubt that smart machines will soon replace humans in a wide variety of jobs, from fast-food service to truck driving. Almost 5 million U.S. manufacturing jobs disappeared between 2000 and 2015. Some of these jobs were sent overseas, but many were lost to increasing automation.

But the economic consequences of the robot invasion are not as simple as they might look. I saw this for myself, growing up in Silicon Valley in the 1970s. A friend’s father worked at the old GM plant in nearby Fremont. Working on the assembly line – he had burns on his arms from bits of flying metal – he was able to support his family. At its peak in the late 1970s, the plant employed 6,800 people.

Unfortunately, the cars they built sucked. In the 1970s and 1980s, GM vehicles were best known for terrible reliability. “One of the expressions was, ‘You can buy anything you want in the GM plant in Fremont’,” Jeffrey Liker, a professor at the University of Michigan, said on a 2010 episode of This American Life. “If you want sex, if you want drugs, if you want alcohol, if you want to gamble – it’s there… within that plant.”

Not long ago, I visited the old GM factory. It’s now owned by Tesla and has been transformed into one of the most advanced auto-manufacturing plants in the world. Giant rolls of raw aluminum enter the plant on one end, $100,000 electric cars roll out the other. “We’re building the future here,” says Gilbert Passin, the company’s vice president of manufacturing.

More than a thousand robots work on the assembly line. Some hang from the ceiling, others scoot along the floor delivering materials, and some giant bots, with names like Iceman, stand four-and-a-half metres tall and can lift a 450-plus-kilogram Tesla body like a toy. Although it is one of the most highly automated car-manufacturing plants in the world, Tesla builds only about 1,000 cars a week (GM used to churn out 1,000 a day). But here is what’s really surprising: Tesla employs an estimated 3,000 people. So not only does Tesla build a better car, it also requires roughly three times as many human beings per car to do it as GM did. In this case, factory automation has not destroyed jobs – it has actually increased the number of jobs per car.
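The per-car comparison depends on how many days a week GM’s line ran, and the text does not say. Below is a rough sketch in Python. It assumes that GM’s 1,000-cars-a-day rate held on either 5 or 7 days a week, and that the late-1970s peak headcount of 6,800 applies at that rate.

```python
# Rough workers-per-car comparison for the Fremont plant, GM era vs Tesla era.
# Assumptions (not stated in the text): GM built 1,000 cars/day on either 5 or 7
# days a week, and its late-1970s peak headcount of 6,800 applies at that rate.
GM_WORKERS, GM_CARS_PER_DAY = 6_800, 1_000
TESLA_WORKERS, TESLA_CARS_PER_WEEK = 3_000, 1_000


def workers_per_weekly_car(workers: int, cars_per_week: int) -> float:
    """Headcount divided by weekly output: people employed per car built each week."""
    return workers / cars_per_week


tesla_ratio = workers_per_weekly_car(TESLA_WORKERS, TESLA_CARS_PER_WEEK)

for days_per_week in (5, 7):
    gm_ratio = workers_per_weekly_car(GM_WORKERS, GM_CARS_PER_DAY * days_per_week)
    print(f"{days_per_week}-day week: GM {gm_ratio:.2f}, Tesla {tesla_ratio:.2f}, "
          f"Tesla/GM = {tesla_ratio / gm_ratio:.1f}x")
```

With a seven-day week, Tesla employs about 3.1 times as many people per car as GM did, which fits “roughly three times”. With a five-day week, the multiple is closer to 2.2. In both cases Tesla’s total headcount of 3,000 stays below GM’s 6,800 peak. The increase is in jobs per car.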

What do these humans do? Some work assembly-line jobs that are too nuanced and complex for a robot, such as dashboard installation; others maintain the robots and the tool attachments that they use. “This plant is a collaboration of humans and robots,” says Passin. “The robots do what they are good at, and humans do what they are good at.” To put it another way, this is not an automated factory, but a sophisticated dance of humans and robots.

Yet there is no question advances in smart machines will have a big impact on jobs. Some of the lost jobs will be offset by the creation of new positions in high-tech fields; others will be gone for good. A 2013 study from Oxford University suggested that nearly half of existing jobs are at risk of automation in the next 20 years. “We are facing a paradigm shift, which will change the way that we live and work,” the authors of a recent Bank of America Merrill Lynch report have said. “The pace of disruptive technological innovation has gone from linear to parabolic in recent years.”

It’s not hard to see which jobs are in the robotic bull’s-eye: clerical positions, assembly-line work, burger-flipping. February’s White House economic report predicted that if a job pays less than $20 an hour, there’s an 83 per cent chance it will eventually be eliminated by automation. But beyond that, it’s hard to predict the impacts. Unemployment and stagnant wages among blue-collar and less-educated workers have already transformed American politics and fuelled the rise of Donald Trump – increasing automation will only accelerate these trends.

“There’s a lot of fear out there,” says Ken Goldberg, a roboticist at the University of California, Berkeley, where he heads a “People and Robots” initiative that hopes to find ways to enhance human-robot collaboration. “But there are things that robots are good at, and things that humans are good at. So we need to think about them in duality, because then you can find cases where the robot will let humans do things better than they could before.”

One example of human-robot collaboration that Goldberg is working on is surgical robots. They have been around for 15 years, but until now, they have been little more than fancy mechanical arms that doctors operate while watching through cameras, allowing them to perform minimally invasive surgery safely. There’s no autonomy, although that may soon change. Surgery involves a lot of tedious subtasks – like sutures. “You’re paying this star surgeon to sit there and sew,” says Goldberg. “It’s not a good use of time.” Another tedious task is called “debridement” – picking out dead or cancerous tissue inside the body. “Here again, we think robotics could do that nicely,” he says. “We can scan with a sensor and find a tumour underneath the surface of the tissue, then cut and remove it. Will this put surgeons out of work? No. We are talking about building tools to make surgeons better, allowing them to focus on important tasks and letting the robot do the tedious work.”

Still, the economic transformation will be brutal for many less-skilled workers. The best way to tackle the big upheavals that are coming, Thrun has argued, is not by trying to rein in technology, but by improving education. “We are still living with an educational system that was developed in the 1800s and 1900s,” he told The Economist last year. His online university, Udacity, now has 4 million registered users worldwide and offers “nanodegrees” in computer-related fields. “We have a situation where the gap between well-skilled people and unskilled people is widening,” he said. “The mission I have to educate everybody is really an attempt to delay what AI will eventually do to us, because I honestly believe people should have a chance.”

According to an influential 2014 paper by the Center for a New American Security, the future of combat will be an “entirely new war-fighting regime in which unmanned and autonomous systems play central roles… U.S. defense leaders should begin to prepare now for this not-so-distant future – for war in the Robotic Age.” Think of swarms of small armed drones, created by 3-D printers, darkening the skies of a major city; of soldiers protected by Iron Man-like exoskeletons and equipped with neural implants to mainline targeting data from distant computers; of coastal waters haunted by unmanned submarines; of assassinations carried out by wasp-size drones. In this new age of war, expensive Cold War technology like fighter-bombers will be increasingly irrelevant, the military equivalent of mainframe computers in a world that has moved on to laptops and iPads.

This revolution in military technology seems to be driven by three factors. The first is risk reduction. Robots can go into situations where soldiers can’t, potentially saving the lives of troops on the ground. In Afghanistan and Iraq, there are more than 1,700 PackBots, toy-wagon-size robots that are controlled via a wireless connection and equipped with a remote-controlled arm that can be fitted with a gripper for handling land mines or a camera for surveillance. Newer military drones, such as the MQ-9 Reaper, can already take off, land and fly to designated points without human intervention. Risk reduction changes the political calculation of war too: Since robots don’t come home in caskets, the use of smart machines allows military leaders to undertake difficult missions that would otherwise be unthinkable.

The second factor is money. The U.S. has an almost $600 billion annual defense budget, which is bigger than those of the next 10 countries combined. The military will spend an estimated $1.5 trillion in total on the F-35 fighter jet, which has been in development for 15 years. In the age of ISIS, this kind of spending is not only hard to justify but might also be downright stupid. An F-35 is useless against a swarm of small armed drones or a suicidal terrorist in a shopping mall. And there’s the fact that humans are expensive to operate and maintain. “About one-third of the military budget is taken up by humans,” says Peter Singer, a senior fellow at New America and author of Ghost Fleet, a novel about the future of war. Recently, a U.S. Army general speculated that a brigade combat team would drop from 4,000 people to 3,000 in the coming years, with robots taking up a lot of the slack.

The third factor is the fear (some would say paranoia) of a rapidly rising China. Since the end of the Cold War, the U.S. has enjoyed unprecedented superiority over its rivals through precision-guided missiles and other technologies developed to outsmart the Soviets. But now the Chinese seem to be catching up, boosting defense spending rapidly. China’s new anti-ship missiles could make it too dangerous for U.S. aircraft carriers to operate in the western Pacific if conflict breaks out. The Chinese have also invested heavily in advanced weaponry, especially military drones, which they are manufacturing and selling to politically volatile nations like Nigeria and Iraq.

“Google and Amazon have better drones and know more about you than the CIA. What happens when our government looks to them for the latest in military defence technology?”

For the military, the question is where to push the deployment of smart machines, and how far. For some jobs, machine intervention is a no-brainer. “Taking off and landing from an aircraft carrier used to be some of the most dangerous aviation tasks in the military,” says Mary Cummings, who was one of the first female fighter pilots in the U.S. military and now runs a robotics lab at Duke University. Cummings, who had friends killed in crashes while attempting aircraft-carrier landings, is working on deeper human-machine collaboration, especially in situations fraught with anxiety, which can lead to deadly miscalculations. “Today, the autopilot on an F-18 can take off and land from a carrier much better than any human,” she says. “It’s much safer.” At the other extreme, humans have remained firmly in the loop, even though they may not be technologically necessary. Although drones will soon be sophisticated enough to identify, target and kill a person from 40,000 feet, all drone attacks require a sign-off from officials in “the kill chain”, which ends with the president.

Many of the smart machines being developed for military purposes may best be understood as extensions of human capabilities (vision, hearing and, in some cases, trigger fingers). Carnegie Mellon has U.S. Army support for a project to build robotic snakes, with cameras attached, that could crawl close to enemy targets. Researchers at Harvard University, backed by DARPA, developed a “RoboBee” with a three-centimetre wingspan that can fly through open windows and potentially conduct covert surveillance. DARPA is also working on so-called vampire drones, which are made of materials that could “sublimate” – i.e., turn directly from solid into gas – after the mission is complete. Some of the research DARPA is conducting is right out of a James Cameron movie: According to Annie Jacobsen, author of a history of DARPA called The Pentagon’s Brain, the agency has been working on an idea called “Augmented Cognition” for about 15 years. “Through Augmented Cognition programs, DARPA is creating human-machine biohybrids, or what we might call cyborgs,” Jacobsen writes. DARPA scientists are experimenting with neural implants in human brains, which could act as a pathway for rapid information exchange between brain and computer, opening up the possibility – however remote – of soldiers controlling battlefield robots not with a joystick, but by sending something like thoughts, encoded as electric impulses.

Still, it’s important to distinguish DARPA-funded sci-fi projects from what’s going on in the real world. Last June, I attended the finals for the DARPA Robotics Challenge near L.A. This competition, which offered a $2 million first prize, was launched by the Department of Defense to inspire innovation in robots that might be useful in the event of a disaster like Fukushima, where a machine could provide aid within a highly radioactive power plant.

The favourite to win the finals was Chimp, a 200-kilogram robot built and operated by a team from Carnegie Mellon. Chimp was an awesome sight to behold: 1.5 metres tall, with arms capable of curling 140 kilograms. “We designed a robot that’s not going to fall down,” one of Chimp’s software engineers boasted.

But in the world of robots, things don’t always go according to plan. The competition quickly turned into a crashing-robot comedy: One fallen bot spilled hydraulic fluid like alien blood; another’s head popped off. Even the mighty Chimp faceplanted when it tried to open a door. The future, it seems, is still a ways off.

Left: The robot Chimp in a Department of Defense contest. Right: Soldiers in exoskeletons might one day use neural implants to control robots.

When it comes to robots and war, the central question is not technological but philosophical: Will machines be given the power and authority to kill a human being? The idea of outsourcing that decision – and that power – to a machine is horrifying. Last July, a Connecticut teenager sparked a heated debate by attaching a handgun to a simple drone, then uploading a video to YouTube (the video has more than 3.5 million views). Imagine the panic that will ensue when someone adds an iPhone to a store-bought drone equipped with a semi-automatic weapon, creating, in effect, a homemade remote-controlled assassination drone.

Of course, simple autonomous weapons are nothing new: Consider the land mine. It is the embodiment of a stupid autonomous weapon, because it explodes whether it is stepped on by an enemy soldier or a three-year-old. In the 1990s, American activist Jody Williams helped launch an international campaign against land mines that eventually led to a treaty signed by 162 countries banning the use, development or stockpiling of anti-personnel land mines. Williams won a Nobel Peace Prize for her efforts. Now, she is the figurehead for a new movement called the Campaign to Stop Killer Robots. With the help of 20 fellow Nobel laureates, she wants to ban fully autonomous weapons before they become a reality. “I find the very idea of killer robots more terrifying than nukes,” she told NBC last year from Geneva. “Where is humanity going if some people think it’s OK to cede the power of life and death of humans over to a machine?” The U.N. has been deliberating about possible international guidelines since 2014.

In the U.S., the Pentagon requires “appropriate levels of human judgment over the use of force”. But what is “appropriate”? In fact, some weapons systems now being built are already fully autonomous. The U.S. will soon deploy a long-range missile with an autonomous system that can seek targets on its own. South Korea has developed an automated gun tower that can sense and fire at a human target more than a kilometre and a half away, even in the dark.

At the robot competition in California, I talked with Ronald Arkin, a professor at Georgia Tech, who has written widely about robot ethics. “I’m not an advocate for Terminator-style weapons,” Arkin told me. “But I do think it’s a complex argument.” Arkin made the point that smarter weapons could lead to fewer civilian casualties. “Because they don’t have emotions, they are less likely to panic in a dangerous situation,” he said, “or make bad judgments because of what psychologists call the ‘fog of war’.” Case in point: Last October, after a U.S. AC-130 gunship mistakenly opened fire on a hospital in Kunduz, Afghanistan, killing at least 42 people, Army Gen. John F. Campbell called it “a tragic but avoidable accident caused primarily by human error”. Would an autonomous drone have made the same mistake?

“Right now, we fly around drones to kill people who are truly dreadful people,” says computer scientist and author Jaron Lanier. “But we’re setting up systems that can go around and search for people and kill them.” The question is, of course, what happens when the dreadful people get the drones and start hunting us? Will that happen? “Well, history teaches us that, yes, it will,” he says. “And when it does, instead of saying, ‘Oh, those evil machines’, what we should say is, ‘Wow, engineers were really irresponsible and stupid, and we weren’t thinking about the big picture.’ We should not be talking about [the evils of] AI, we should be talking about the responsibility of engineers to build systems that are sustainable and that won’t blow back. That’s the right conversation to have.”

Some researchers believe we may be able to program a crude sort of ethical code into machines, but that would require a level of sophistication and complexity we can only speculate about. Nevertheless, the arrival of more and more autonomous weaponry is probably inevitable. Unlike nuclear weapons, which require massive investments by nation-states to acquire, this new technology is being driven by commercial development. “I guarantee you, Google and Amazon will soon have much more surveillance capability with drones than the military,” says Cummings. “They have much bigger databases, much better facial recognition, much better ability to build and control drones. These companies know a lot more about you than the CIA. What happens when our governments are looking to corporations to provide them with the latest defence technology?”

But Cummings thinks the arrival of true killer robots is a long way off: It’s easier to dream up ideas about autonomous robots and weaponry in a lab than it is to deploy them in the real world. I saw this for myself shortly after the robot competition in California ended. I wandered back into the garage area, where the teams that had built and operated the robots were hanging out. It felt like a NASCAR garage, with bays of tool boxes and high-tech gear and team members drinking beers. Except instead of cars, it was robots. First prize was won by a small robot named DRC-Hubo, built by a team from South Korea, which led to jokes about the Pentagon arming our allies who happen to be neighbours with China.

As for the robots themselves, their moment was over. They hung from their harnesses, no more animate than a parked car. Even the mighty Chimp, its bright-red paint scuffed from its dramatic fall, looked feeble. Meanwhile, the engineers and programmers on the Carnegie Mellon team milled around, putting away their tools or staring at computer screens. It was a Wizard of Oz moment, the sudden glimpse behind the curtain when you see that an awesome machine like Chimp is a product of human sweat and ingenuity, and that without the dozens of people who worked for years to design and build it, the robot would not exist. The same can be said for the Google self-driving car or the CIA drones that assassinated Al Qaeda leaders in Pakistan and Yemen. Compared with a toaster, they are intelligent machines. But they are the product of human imagination and human engineering. If, in the future, we design machines that challenge us, that hurt us, that inflict damage on things we love, or even try to exterminate us, it will be because we made them that way. Ultimately, the scary thing about the rise of intelligent machines is not that they could someday have a mind of their own, but that they could someday have a mind that we humans – with all our flaws and complexity – design and build for them.

—

From issue #775, available now. Top photograph by Philip Toledano.