Last summer, a pornographic video of a prominent TikTok creator started going viral on social media. A screengrab of the video on TikTok made its way to the Instagram account @tiktokroom, along with a comment from the creator whose face was posted in the video. “It’s a deepfake but still, my parents on this app, chill,” the creator said. (Rolling Stone is not naming this creator or any other creator referenced in this story, on the grounds that the videos were posted without their consent.)

A deepfake is a digitally manipulated video that uses artificial-intelligence technology to swap the face of one person (often, but not always, a celebrity) onto another person’s body, usually without their consent. Much of the media focus on deepfakes has been on their potential to spread disinformation, particularly as it relates to a political campaign or election, as underscored by a doctored clip of Nancy Pelosi that went viral on Facebook last year. Yet the vast majority of deepfakes — 96 percent, according to 2019 research from the AI firm Sensity — are pornographic and used to target women.

In the past, deepfake technology was primarily used to create nude or sexually explicit images of female celebrities in particular. That’s in part because to actually build models for making deepfakes, you need thousands of photos or several minutes of video footage to do a good job, explains Giorgio Patrini, the founder and CEO of Sensity.

But the technology has gotten more accessible and less complex, with terrifying results. Last week, Sensity released a report about an AI bot on the encrypted messaging app Telegram being used to create nude or sexually explicit images of hundreds of thousands of women without their consent. Unlike other software used to create deepfake videos, the bot required only a single photo to depict a woman nude, which has led to non-famous women and girls being increasingly targeted. Influencers on platforms like Instagram and TikTok have been targeted as well. “It’s video-based as a platform, so it actually provides more material [to make deepfakes],” says Patrini. “These people are more exposed than if they were just uploading pictures.”

Danielle Citron, professor of law at the Boston University School of Law and vice president of the Cyber Civil Rights Initiative, says the psychological impact of appearing in a pornographic deepfake video cannot be overestimated, and some have also compared nonconsensual pornography to a form of digital rape. “When you see a deepfake sex video of yourself, it feels viscerally like it’s your body. It’s an appropriation of your sexual identity without your permission. It feels like a terrible autonomy and body violation, and it rents space in your head,” she says. Patrini says that appearing in a deepfake porn video can also do significant financial damage to a creator’s business, citing the example of a prominent YouTuber who lost a brand partnership after someone posted a deepfake video of her on a porn site.

To make matters worse, nearly a third of TikTok users are under the age of 14, according to internal company data reviewed by the New York Times, rendering this population extra vulnerable to the threat. As Rolling Stone previously reported, it is not uncommon for underage TikTok creators to find their videos posted in compilation videos on tube sites like Pornhub. Rolling Stone spoke with the mother of a then-17-year-old girl who found that one of her TikTok videos had been posted on Pornhub. “She was mortified. She didn’t want to go back to school,” the mother, who requested not to be named, told Rolling Stone. “She very innocently posted that video. She didn’t want to get involved with Pornhub. It’s not a lesson you should have to learn at 17.” (She says Pornhub took the video down immediately at their request. In an email to Rolling Stone, Pornhub denied that TikTok content was frequently posted on its platform.)

In its investigation for this story, Rolling Stone found more than two dozen examples of prominent TikTok creators being featured in deepfake porn. Most of the videos were originally posted on one of a handful of websites devoted exclusively to deepfakes, but they are also not difficult to find on social media platforms like Twitter and Reddit, even though both platforms have policies banning deepfakes.

In a statement, a Twitter spokesperson directed Rolling Stone to its policy prohibiting deepfakes, or any “intimate photos or videos of someone that were produced or distributed without their consent.” As of publication, Twitter has not removed a tweet containing a deepfake pornographic video of two TikTok creators. Reddit, which also has a policy against posting nonconsensual deepfakes, did not immediately respond to a request for comment.

Deepfakes of TikTok creators are also not difficult to find on Pornhub and other tube sites, even though Pornhub bans deepfakes as well. “They’ve basically just banned people from labeling deepfakes. People could still upload them and use a different name,” says former Sensity researcher Henry Ajder. He estimates that “you probably have somewhere in the low thousands” of deepfakes on Pornhub.

One of the most popular websites for deepfakes, which Rolling Stone is declining to name, had a Discord server with more than 100 members where users could request their favorite individual TikTok celebrities and influencers to be the subject of a deepfake. Although this website only publicly posts videos featuring creators over the age of 18, one of the most requested celebrities on this server is a popular TikTok creator who just turned 17. “Do [name redacted], by the way she turns 18 in 4 days,” one user recently said, requesting a creator. (The admin made the video and posted it on the website two weeks after she turned 18.)

When asked about the server, Discord said that it violated its policies regarding nonconsensual nudity and that it had taken it down. “Discord has a zero-tolerance approach to nonconsensual pornography on the service and the company takes immediate action when we become aware of it,” a company spokesperson said. A notice on the deepfake pornography website, however, says it is “working on an alternative” server.

As for why people create deepfake pornography in the first place, Citron says that some mistakenly believe it is essentially a victimless crime. “The harm is so far away from people that it seems like a game,” she says. “You can appropriate someone’s body and you don’t have to ask them.” The deepfake pornography phenomenon stems from a “culture of impunity,” or the idea that young men are entitled to do as they please with women’s bodies.

But even though some men may feel entitled to do what they want with influencers’ images because they are public figures, being the subject of deepfake porn, Citron says, “feels like a virtual sexual assault.”

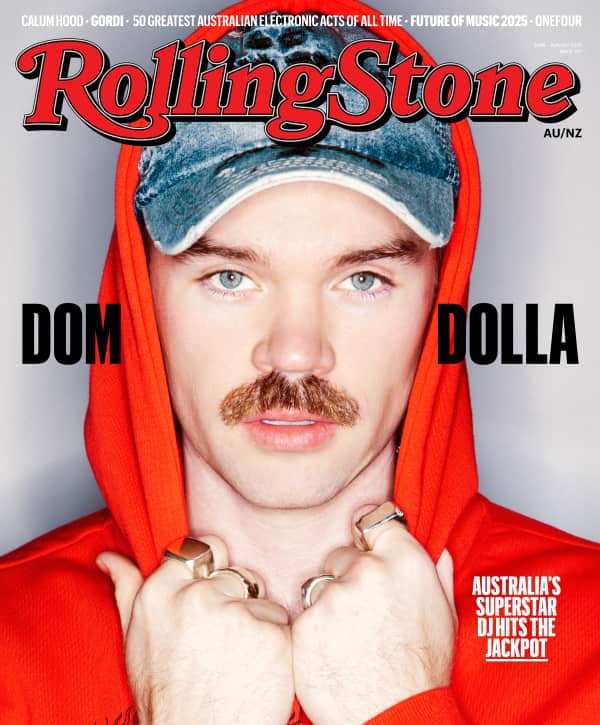

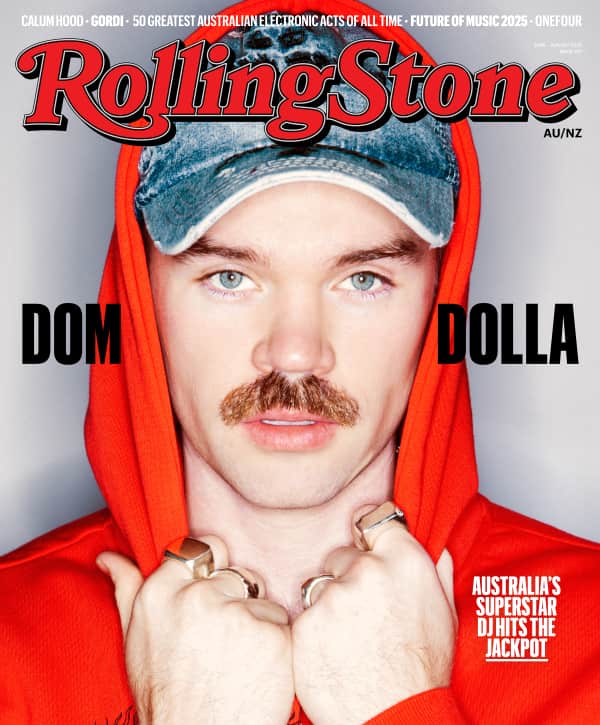

From Rolling Stone US