On Thursday, YouTube became the latest platform to crack down on QAnon, the baseless conspiracy theory promoting the idea of a deep state leftist cabal of pedophiles and child traffickers.

In a blog post, the company said that it would be “taking another step in our efforts to curb hate and harassment by removing more conspiracy theory content used to justify real-world violence.” It specifically cited QAnon as an example, noting that it had removed thousands of individual QAnon-themed videos and hundreds of QAnon-affiliated channels as a result of this policy update.

In announcing its policy update, YouTube has joined a slew of other platforms that have taken action against proponents of the conspiracy theory, including, most recently, Facebook, Pinterest, and the TikTok competitor Triller. “There’s a greater focus in the lead-up to the presidential election on having clear, accurate communication to ensure that we are able to have a safe, fair democratic election,” Kathleen Stansberry, associate professor of communications at Elon University, tells Rolling Stone. “There’s a lot of concern that widespread misinformation is an increasing problem and that needs to be addressed both by users and platform level.”

QAnon has also been linked to episodes of real-world violence, such as the 2019 murder of a Staten Island mob boss, a 2018 incident with an armored vehicle at the Hoover Dam, and the kidnapping of multiple children across the country this year. Such incidents have likely played a role in putting pressure on platforms to update or clarify their content guidelines to scrub their QAnon presence, Stansberry says.

Following widespread de-platforming by social media websites, many QAnon believers have taken to alternative platforms like Parler and MeWe, which cater to conspiracy theorists and far-right extremists by offering much laxer content guidelines.

From the very start of the QAnon movement in 2017, YouTube has played an active part in the community, particularly for those new to the movement. “YouTube has played a major role in radicalizing Q believers, with popular Q videos like Fall of the Cabal and Out of Shadows often serving as an introduction to the movement,” author and QAnon researcher Mike Rothschild tells Rolling Stone.

Although platforms like Facebook have often served as entry points for newcomers to the community, YouTube’s role as a “storytelling platform” has served a particularly powerful function for QAnon, Stansberry says. “As with many conspiracy theories, there’s rich lore attached to some of the structure of QAnon,” she says. “It’s almost like the plot of a movie or an immersive video game. You become very sucked into the story.” YouTube is a “very effective platform” for the dissemination of such narratives, she says.

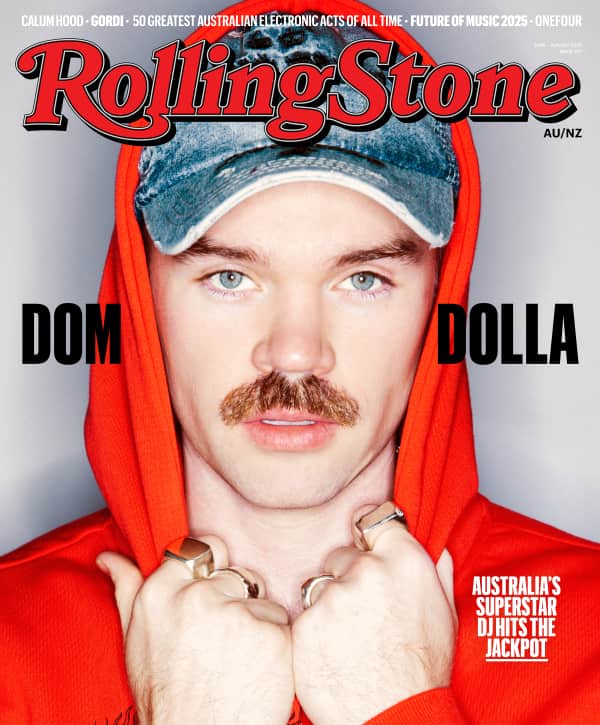

Love Music?

Get your daily dose of everything happening in Australian/New Zealand music and globally.

Rothschild says that, like many other sites’ de-platforming efforts, YouTube’s latest move likely won’t scrub QAnon from the web entirely. “YouTube banning Q content is a welcome and overdue step in de-platforming Q, but I’d caution against looking at it as a death blow for the conspiracy,” he says. “Q promoters excel at ban evasion, and social media platforms are often slow to follow up on their promises of large scale bans.”

Yet forcing believers off YouTube will likely reduce other people’s exposure to dangerous QAnon-related ideas, thus reducing the likelihood of them becoming radicalized, Stansberry says. “We often think of conspiracy theories like this as hydras — the more you cut off heads, the more heads grow,” she says. “But if you can cut off the source of attention and cut off access to eyeballs and people, then it becomes weaker.”

From Rolling Stone US