In the lead-up to Election Night, most coverage has focused on how platforms like Facebook and Twitter are working to prevent election-related misinformation and disinformation, in an effort to avoid a repeat of what happened in 2016. One platform, however, has largely avoided scrutiny, despite already serving as host to many easily refuted claims: TikTok.

“I’m really worried about TikTok,” says Angelo Carusone, the CEO of Media Matters, noting that TikTok has an opportunity to serve as an “accelerant” for emotionally charged misinformation or calls to violence. “You have a moment of tension and chaos, so if you see a few TikTok videos that go viral they can be mainlined into the zeitgeist,” he says.

Part of the reason TikTok may be particularly well-suited to hosting such content is that TikTok videos tend to go viral on outside platforms, such as Twitter; in the right-wing ecosystem, they are also frequently shared on Telegram threads. “When TikTok videos proliferate at a major scale, they can have a major moment,” says Carusone, citing as an example the Wayfair child sex trafficking rumors, which initially germinated on TikTok before proliferating throughout social media as a whole.

One popular trend involves TikTok users posting footage of boarded-up shops in large metropolitan areas, such as downtown Los Angeles or New York City, with the theme music from The Purge blaring in the background to underscore its apocalyptic connotations. Devoid of context, these videos have been shared widely by Trump supporters on Twitter and on various Telegram threads, as evidence of the supposed onslaught of violence planned by far-left groups in the wake of the election.

Many of these videos, however, are by Trump supporters urging the right to prepare for the prospect of mass violence committed by the far left if Trump wins the election. One video posted Nov. 1 makes the false claim that antifa is planning to commit violence while masquerading as Trump supporters. It includes a flier urging far-left protesters to “wear MAGA hats, USA flags, 3%er insignia, a convincing police uniform is even better! This way police and patriots responding to us won’t know who their enemies are.”

Though the flier actually originates from a far-right conspiracy theory from 2017, and has been debunked numerous times, the TikTok managed to go viral on the platform as well as on Facebook and Twitter, where a screengrab from the video garnered nearly three thousand retweets. Though the TikTok appears to have been removed, it continues to be duetted by other Trump supporters on the app.

Some videos on TikTok appear to be well-meaning, but do their own part to foment fear and anxiety nonetheless. For example, one TikTok with more than 33,000 views appeals to people of color and LGBTQ people by begging them to stay inside during the election and a few days after. The TikTok includes a viral tweet making this claim based on “leaked screenshots” from far-right groups, despite not including any of these screenshots. “This is fucking terrifying, and I’m so sorry if you’re living in America and you’re a minority right now,” the woman in the video says. Another video making similar claims has been viewed on TikTok more than 365,000 times.

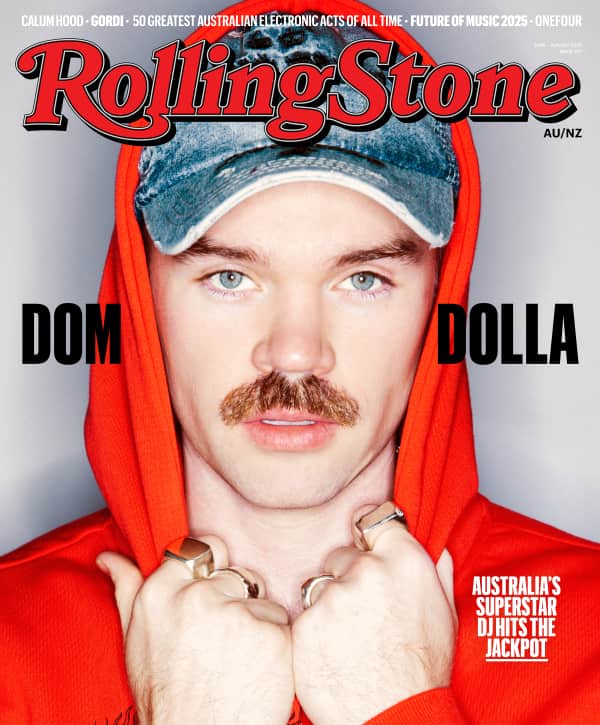

Compared to many of the other platforms, TikTok has a fairly stringent policy regarding misinformation. The platform has partnered with fact-checkers to remove misinformation or limit its reach, though it does not label it as such. Its community guidelines specifically prohibit “misleading posts about elections or other civic processes.” As TikTok general manager Vanessa Pappas said last August in a post on the company’s website: “Fact-checking helps confirm that we remove verified misinformation and reduce mistakes in the content moderation process.”

In a statement, a TikTok spokesperson said, “We do not tolerate content or accounts that seek to incite violence. We remove such content and redirect associated searches and hashtags to our Community Guidelines.” Some of the videos flagged by Rolling Stone appear to have since been removed.

In the past, TikTok has taken rigorous action to curb misinformation, such as going out of its way to ban content and accounts promoting the QAnon conspiracy theory last month, a move that Carusone praised. Yet because the app is primarily known for hosting dances and goofy trends, it is largely discounted as a potential vector of misinformation. “It’s much more of a vanguard platform than people fully appreciate and recognize as a whole,” he says.

Update Nov. 3, 9:43 p.m. This post has been updated with comment from TikTok.

From Rolling Stone US