People pay attention to content moderation only when it goes wrong. On Easter Sunday, Steve Stephens jumped out of his car and shot a 74-year-old man, killing him. The two had never met, but Stephens filmed the murder on his phone and posted it to Facebook. In late April, a father killed his 11-month-old daughter and livestreamed it before hanging himself. Six days later, Naika Venant, a 14-year-old who lived in a foster home, tied a scarf to a shower’s glass doorframe and hanged herself. She streamed the entire suicide in real time on Facebook Live. Then in early May, a Georgia teenager took pills and placed a bag over her head in a suicide attempt. She livestreamed the attempt on Facebook and survived only because viewers watching it unfold called the police, who arrived before she died.

These things shock and scare everyday people who regularly use the Internet, but they are what an estimated 150,000 people look at every day, for hours, as their job.

Content moderation is the practice of removing material that is offensive or that violates the terms of use of Internet service providers and social networking sites such as Microsoft, Google, Facebook and Twitter. Performed by human moderators working in conjunction with some algorithms, the job requires people to review content before it can be deleted or reported. It exists in the background of the tech world, but it is critical to providing the safe, clean interface people expect when they’re online. Few are aware of how much human labor goes into making their online experience as seamless as possible, and even fewer think about the toll it takes on the people who do it.

“Content moderation” is a fairly sterile name for what the offending content can actually be. While the majority of flagged content is innocuous (photos of friends in risqué Halloween costumes, or pictures from a party where people are drinking), other material under review ranges from child pornography, rape and torture to bestiality, beheadings and videos of extreme violence. Even if this makes up only a tiny fraction of what is online, consider the sheer volume of content posted every day. According to the social media intelligence firm Brandwatch, about 3.2 billion images are shared each day. On YouTube, 300 hours of video are uploaded every minute. On Twitter, 500 million tweets are sent each day, which amounts to about 6,000 tweets each second. If just two percent of the images uploaded daily are inappropriate, then on any given day there may be 64 million images that violate a terms of service agreement, and that counts images alone.
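As a rough check on the arithmetic in this paragraph, the short Python sketch below recomputes the two derived figures from the quoted Brandwatch volumes. The function names are hypothetical, and the two percent share is the article’s illustrative assumption, not a measured rate.

```python
# Back-of-envelope check of the daily-volume figures quoted in the article.
# Assumption: the 2 percent violation share is hypothetical, not a measured rate.
# The volumes are the Brandwatch estimates cited in the text.

IMAGES_PER_DAY = 3_200_000_000
TWEETS_PER_DAY = 500_000_000
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 seconds


def violating_images_per_day(images_per_day: int, percent: int) -> int:
    """Images that would break terms of service if `percent` percent did (integer math)."""
    return images_per_day * percent // 100


def tweets_per_second(tweets_per_day: int) -> float:
    """Average tweet rate, assuming tweets are spread evenly across the day."""
    return tweets_per_day / SECONDS_PER_DAY


print(violating_images_per_day(IMAGES_PER_DAY, 2))  # 64000000
print(round(tweets_per_second(TWEETS_PER_DAY)))     # 5787
```

Both results line up with the text: 64 million images at a two percent share, and roughly 5,800 tweets per second, which the paragraph rounds to about 6,000.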

“There was literally nothing enjoyable about the job. You’d go into work at 9 a.m. every morning, turn on your computer and watch someone have their head cut off,” one Facebook moderator told the Guardian earlier this year. “Every day, every minute, that’s what you see. Heads being cut off.”

Henry Soto first moved from Texas to Washington in 2005 so his wife, Sara, could take a job at Microsoft. He never imagined that by 2015 he would have trouble spending time with his young son. Not because his son struggled with the move, or because of any of the other trials of raising a child, but because simply seeing his son would trigger terrible images in Soto’s head. According to a complaint filed in Washington State court, those images came from the hours of content he had watched and reviewed, much of it involving child rape and murder. So in 2016, Soto and his co-plaintiff Greg Blauert, who worked with Soto in content moderation, filed a lawsuit against Microsoft. The complaint for damages filed by their lawyers details a horrifying reality, in which Blauert and Soto spent hours each day reviewing graphic content without adequate psychological support.

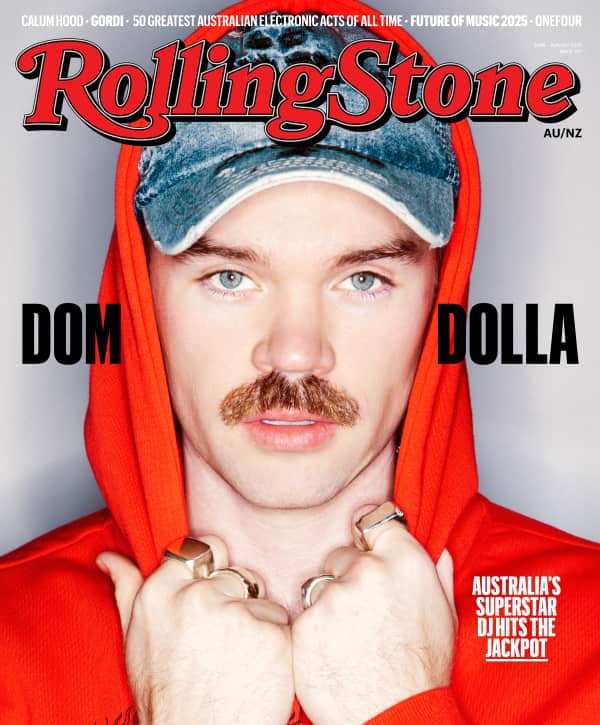

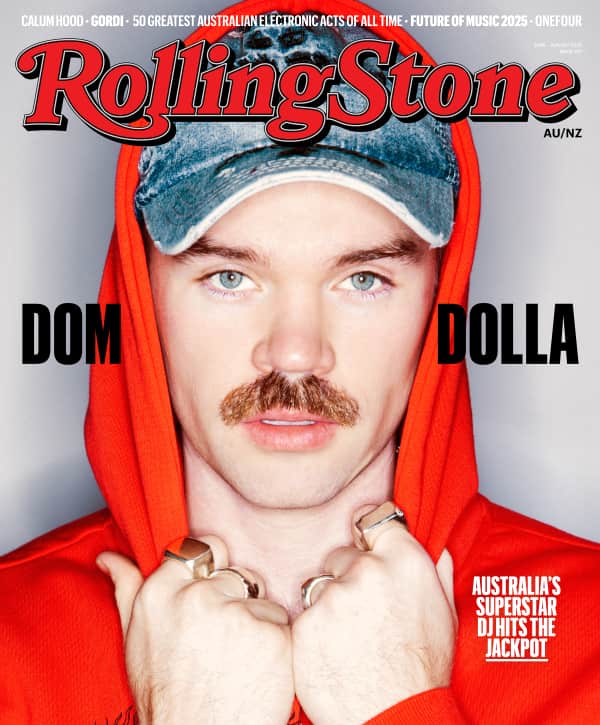

According to the lawsuit, Soto worked in the customer service department, where he handled call center operations and repair problems related to MSN.com, before he was “involuntarily transferred” to the Online Safety department in 2008. The complaint alleges that neither Soto nor Blauert was “warned about the likely dangerous impact of reviewing the depictions nor were they warned they may become so concerned with the welfare of the children, they would not appreciate the harm the toxic images would cause them and their families.”

“He was initially told that he was going to be moderating terms of use, that’s all,” says Ben Wells, the attorney representing Soto in the lawsuit. “And then he started out and quickly learned that this was horrible content. While he became extremely good at what he did, and had good employment reviews, by 2010 he was diagnosed with PTSD.”

Soto declined interview requests through Wells, citing his PTSD. Blauert’s attorney did not respond to interview requests.

In 2014, after years of reviewing content, which Wells says was largely child pornography, Soto saw a video of a young girl being raped and killed. It turned out to be the last straw.

“Triggers turn on these videos in your brain, and then you watch them again and again and again,” says Wells, describing how seeing one bad image after viewing many others could push someone over the edge and turn daily life into a continuous PTSD trigger. You could just be walking by a playground where kids are playing, or see a knife in your kitchen, and the images you had seen would play again in your head. This, Wells says, is what happened to Soto.

These issues affected Soto’s family as well. Sometimes Soto can’t be around his young son, and his son can’t have friends over because of what seeing them might trigger in Soto. Soto is hard to be around when grappling with these issues. As the lawsuit states, his symptoms include “the inability to be around computers or young children, including, at times, his own son, because it would trigger memories or horrible violent acts against children he had witnessed.” The lawsuit also lays out that Soto has a startle reflex, spaces out, has nightmares and has had auditory and visual hallucinations.

Yet Wells says this is only a snapshot of the effects, ones that most people can’t even imagine.

Microsoft vehemently denies these claims, particularly the allegation that Soto didn’t receive adequate psychological support. A spokesperson for Microsoft tells Rolling Stone that the company takes seriously both its responsibility to remove imagery of child sexual exploitation shared on its services and the health of the employees who do this important work. The spokesperson was unable to comment on specific questions about the lawsuit, as the legal process is ongoing. “The health and safety of our employees who do this difficult work is a top priority,” says the representative. “Microsoft works with the input of our employees, mental health professionals, and the latest research on robust wellness and resilience programs to ensure those who handle this material have the resources and support they need, including an individual wellness plan. We view it as a process, always learning and applying the newest research about what we can do to help support our employees even more.”

These days, Hemanshu Nigam is the CEO and founder of SSP Blue, an advisory firm for online safety, security and privacy challenges. But from 2002 to 2006, he worked at the senior levels of Microsoft. Nigam also questions the validity of Soto’s claims, specifically the allegation that Microsoft lacked a suitable psychological support program. “Microsoft was one of the first companies that started focusing on wellness programs, before the rest of the industry even had a notion that they should be looking at it,” he says. Microsoft says the program has been in place since 2008.

Yet Microsoft has outsourced lower-level content moderation to the Philippines, where, according to Wired, moderators work for low pay and with very little of the psychological support this work requires. (Microsoft declined to comment on whether it continues this practice.) Wells says Soto was even sent to the Philippines to monitor Microsoft’s program there, and that he raised the issue that moderators lacked the support and pay they needed and deserved, given the nature of the work.

Sarah T. Roberts, an associate professor of information studies at UCLA and author of the forthcoming Behind the Screen: Digitally Laboring in Social Media’s Shadow World, is one of the few academics who study commercial content moderation closely. She has researched the people doing content moderation in the Philippines as well as in the US, and based on that research she paints a different, darker picture than Microsoft and Nigam do. She believes the Microsoft suit represents a potential turning point in how content moderators are treated, because this is the first time that in-house employees, not part-time contractors, have brought a suit against a prominent ISP.

Soto’s claims about triggering, and the images it summons, echo what Roberts has encountered in her work. “The way that people have put it to me over the years is that everybody has ‘the thing’ they can’t deal with,” she says. “Everyone has ‘the thing’ that takes them to a bad place or essentially disables them.”

Roberts cannot name the firms she has worked with because of confidentiality agreements, but she emphasizes that the people who reach out to her are from large American content platforms.

One person told Roberts he just couldn’t listen to any more videos of people screaming in pain; the audio was the thing he couldn’t take. Separating the audio from the video during review, to make a scene feel less real, is a fairly common practice, and one Microsoft uses. “People have started to develop all sorts of strange reactions to things they didn’t even know existed,” Roberts says.

Work inevitably comes home with content moderators as well. “If this individual who couldn’t handle screaming went and saw a movie where a character was doing just that in the midst of a violent scene, it could trigger a variety of different responses psychologically and physiologically,” says Roberts.

Roberts has found that even relatively mild reactions to moderation work affect people’s lives in a number of ways. People she has spoken to admit they have been drinking more, or have had trouble feeling close to their partners in intimate moments because something would flash in front of their eyes. “I can’t imagine anyone who does this job and is able to just walk out at the end of their shift and just be done,” one moderator told Roberts. “You dwell on it, whether you want to or not.”

The effects of the work are felt even by those who don’t do it full time. When Jen King was a product manager at Yahoo from 2002 to 2004, she worked on antisocial behavior and content moderation. Part of her job was managing a team of full-time content moderators. She says the work carries an acute cost.

“I was working directly with employees who were reviewing this stuff for us. It’s awful, horrible, and disgusting,” she says.

“I reviewed a lot of content myself and it had a personal effect on me. Not to the extent of someone that had to do it eight hours a day, but I had to do it enough, where I did start to question elements of my sanity after a while. You see enough child porn and you’re just like, holy crap. It haunted me for some time after I left my job,” King says. “The kind of things you hear about with PTSD – sometimes I’d randomly remember some of the worst images I viewed and the feelings I’d had as I initially looked at them, the fear, disgust and horror. For some time I feared the memories would never go away.”

King was managing the development of a tool to review and identify child pornography, so while she didn’t watch content frequently, she ended up viewing dozens of hours of content over the course of months. Meanwhile “the customer service folks put in full eight-hour days; for most this was their primary task,” she says.

She went to management and tried to get them to provide additional support to her team, but according to her, the response was “crickets.” King says Yahoo wanted to engage with this material only to the extent needed to avoid criminal liability. “It just wasn’t a priority,” she says.

Yahoo doesn’t explicitly deny these claims, but says it doesn’t take lightly its responsibility to remove child pornography from its platforms.

“Just as important is the health and welfare of the Yahoo employees exposed to this difficult and challenging material,” says a Yahoo spokesperson. “Supporting these employees is a priority for us, and we continue to strengthen and develop the resilience and wellness programs available to them.”

In Roberts’ experience, companies tend to follow a common pattern in how they approach content moderation and support the employees who do it. “In the cases of these large, powerful and high-value platforms, content moderation is a fundamental part of the business that they do,” she says. “But it has been far outpaced by other innovations within the firms.” In other words, while this work is inextricable from large platforms, most invest their time and energy elsewhere, rather than in cutting-edge wellness programs or further research into the impact this work has on people.

Because of employee non-disclosure agreements and a lack of external evaluation and verification, it’s unclear how employees use the support systems available to them, or what those systems fully look like in practice. While Microsoft laid out the specifics of its support system, YouTube and Facebook did not. Multiple employees from content platforms declined interview requests.

When it comes to a real-world comparison for the toll this job takes on the people who do it, “first responders are the closest parallel,” says Nigam. These are the emergency workers and law enforcement officials who are first to arrive at a murder or an incident of child abuse, for example.

Moderators are often the first line of defence in reporting and responding to crimes playing out online, whether those crimes are captured in uploaded photos and videos or happening in real time. As they look through flagged content, moderators may see many of the same things first responders do. “That’s not something anyone could have ever imagined, but it’s a reality that these are the first responders of the Internet,” says Nigam.

Rather than asking where companies fail to support content moderators, or whether the psychological toll is an inextricable part of the work, Nigam believes we should focus on giving moderators more robust care and greater recognition for the important work they do. He also believes we should train them much as we train law enforcement.

The effect of this kind of work, while not studied extensively for content moderators, has been identified in similar occupations. In the Employee Resilience Handbook put together by the Technology Coalition, a group of companies dedicated to eradicating online child sexual exploitation and sharing support mechanisms for employees such as content moderators, a number of disturbing impacts are documented.

One study from the National Crime Squad in the United Kingdom found that “76% of law enforcement officers surveyed reported feeling emotional distress in response to exposure to child abuse on the Internet.” Another, which examined 28 law enforcement officers, found that “greater exposure to disturbing media was related to higher levels of secondary traumatic stress disorder (STSD) and cynicism,” and that “substantial percentages of investigators” “reported poor psychological well-being.” Finally, a recent study from the Eyewitness Media Hub, which looked at the effect of “eyewitness media” such as suicide bombing videos on the journalists and human rights workers who view it, found that “40 percent of survey respondents said that viewing distressing eyewitness media has had a negative impact on their personal lives.”

The Employee Resilience Handbook also identifies ways to support employees who do this work, and numerous ISPs and content platforms, such as Apple, Facebook, Google and Yahoo, have signed on to the Technology Coalition. But there is no independent body that verifies whether support systems are implemented, nor any government regulation that mandates standards of support for employees in this line of work. What we know relies largely on companies self-reporting their practices. Additionally, no database or federal agency tracks content moderators after they leave their jobs, so the ongoing effects of their work are not officially documented. The U.S. National Center for Missing and Exploited Children (NCMEC) is one of the few organizations that has such monitoring for its employees, but the policy is not standard in the industry. And although NCMEC says it liaises with companies regularly, it can’t speak to the specific practices those companies engage in.

While Nigam thinks there is a safe way to do this work, and most companies have robust wellness programs, he doesn’t believe that even the most vigorous support systems for ISPs and content platforms give employees the same level of psychological support we afford or expect for law enforcement, the closest parallel to content moderators’ jobs when it comes to what they’re seeing.

We need to be “learning from police departments on how officers are trained, vetted, identified and modeling those training practices,” he says. “I think that needs to happen now, not tomorrow, not next year, but now.”

Facebook recently announced it will hire 3,000 new content moderators to address the string of violence and death on its platform. A Public Content Contractor role is currently listed on its careers page, but the role description is vague and makes no mention of the type of content that will be reviewed. The sole job responsibility listed is “content review and classification,” and the required skills include the “ability to stay motivated while performing repetitive work” and being “highly motivated and hard-working with the ability to think clearly under pressure, both individually and in a team environment.” The role is a contractor position.