On Tuesday night, Twitter announced that it would be removing 7,000 QAnon accounts on the grounds that they had “engaged in violations of our multi-account policy, coordinating abuse around individual victims, or are attempting to evade a previous suspension.” The platform also announced that it would be preventing URLs associated with QAnon from being shared on the platform as well as banning content associated with QAnon from appearing in its Trending section.

Due to its comparatively relaxed content guidelines, Twitter has long served as one of the primary hubs of the QAnon movement, which is centered on the belief that left-wing public figures are involved in a Deep State conspiracy. Many experts following the movement applauded Twitter’s decision to crack down. “Twitter has changed and they do seem to be taking more of a leadership position in terms of moderating content and better enforcing terms of use policies and I applaud them for it,” says Kathleen Stansberry, assistant professor of media analytics at Elon University.

Others, however, were more skeptical about the efficacy of the move, suggesting the movement has perhaps grown too big to adequately tamp down. “QAnon is not an individual. It’s a global decentralized movement and it’s found a voice in the halls of power,” says Marc-Andre Argentino, a Ph.D. candidate and public scholar at Concordia University studying extremism, religion, and technology. He also cited the emergence of QAnon-supporting congressional candidates. Curbing such a wide-ranging and rapidly growing movement in the long-term, he warns, may prove to be “immensely difficult.”

To those closely following the movement, which has exploded in popularity in recent years, the impetus behind Twitter’s actions seemed to be the increasing visibility of the movement in the mainstream media. In particular, Chrissy Teigen, who has long been the subject of QAnon conspiracy theories, made news when she announced she would be setting her account to private to stave off harassment. “She was perhaps the biggest voice in the problem of QAnon-targeted harassment and shortly afterward they decided to take this action,” says Travis View, cohost of the QAnon Anonymous podcast. (A Twitter spokesperson told Rolling Stone that the update was not in response to the harassment of Teigen, and that it had been tracking coordinated QAnon-related harassment efforts for some time.)

QAnon was also instrumental in promoting the Wayfair child trafficking conspiracy theory, which went viral on Twitter last week. But, generally speaking, QAnon has been gathering steam since the very start of lockdown in mid-March, and activity on Twitter has gone up nearly 85%, according to Argentino. “Conspiracy theories are a way for people to try to provide answers for a problem of evil and project their insecurities onto this larger evil force,” he explains. “The pandemic has caused economic, political and health-related insecurities. That, combined with the fact that people have more time to be online, has created a very fertile environment for conspiracy theories.”

A growing number of congressional candidates have also embraced the movement, prompting widespread concern about its mainstreaming. President Trump has also retweeted or quote-tweeted QAnon content an estimated 180 times, although he has not explicitly promoted the movement.

In response to Twitter’s most recent action against QAnon, many far-right or QAnon-adjacent figures have come out against the platform, claiming it’s hampering free speech; numerous figures also pointed out that “Antifa,” a common straw man for the right, has not been subject to such censure. This argument may be effective at galvanizing the right, but it doesn’t hold much water, says Stansberry: “Twitter is a private company and if you don’t adhere to their terms of use you shouldn’t be able to use it, just like you can’t go into a store and start screaming profanities and expect not to be told to leave.”

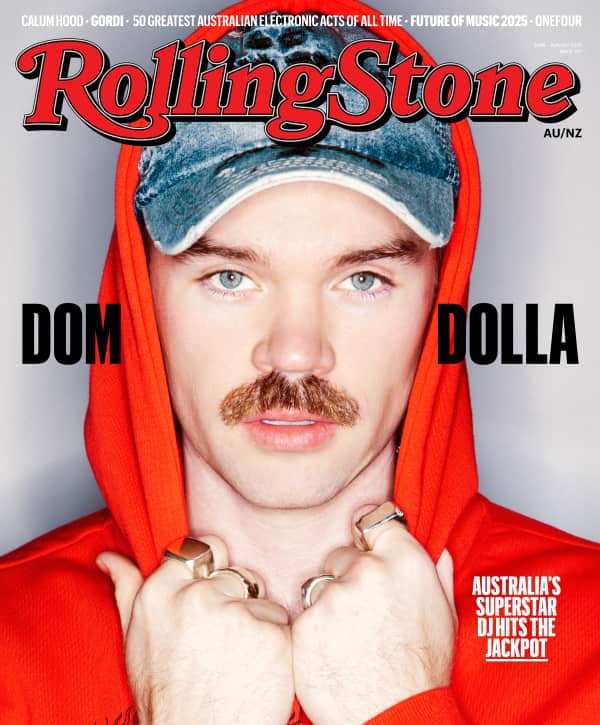

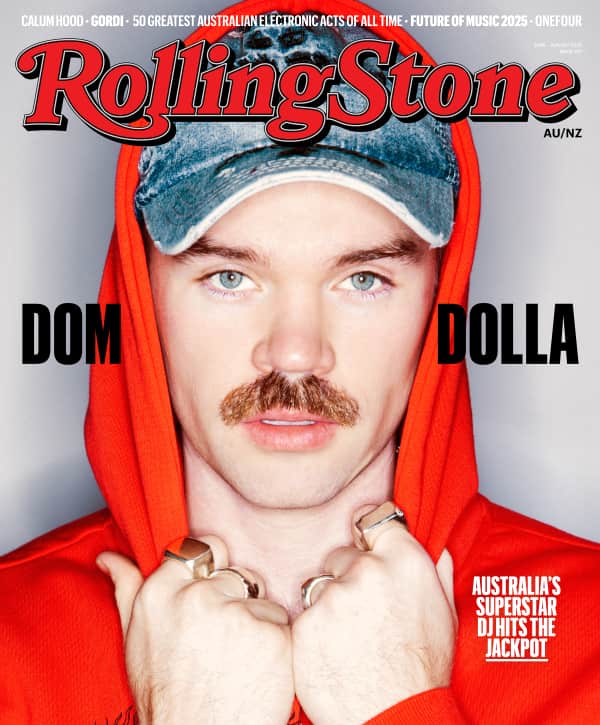

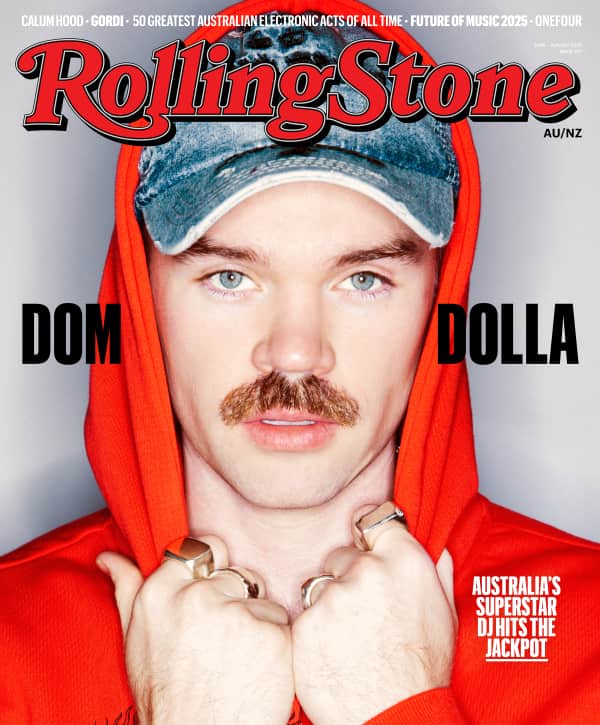

Love Music?

Get your daily dose of everything happening in Australian/New Zealand music and globally.

But Twitter’s actions against QAnon have also had the unintended effect of reinforcing conspiracy theorists’ belief that they are being targeted or silenced by a giant tech conglomerate. “This makes them feel emboldened,” View says. “It sort of confirms their ideological presuppositions that they are speaking such frightening, important truths that threaten the establishment that a powerful entity like Twitter has to crack down on them. They believe they are engaging in an information war and they’re not ready to leave the battlefield.”

And indeed, many QAnon supporters have attempted to operate on Twitter unnoticed in unintentionally comical ways, such as using the number “17” (a reference to Q, the 17th letter of the alphabet) or making reference to “CueAnon” instead of QAnon.

Others are already preparing to abandon the platform for smaller yet more permissive ones, such as Parler (which trended on Tuesday night), Telegram, or Voat. TikTok has also emerged in recent months as a potential new host for QAnon, particularly among younger supporters, for whom referencing the movement is “like a social media cheat code,” View says. “It’s an easy way to get a ton of attention very quickly.”

Ostensibly, this is how de-platforming is supposed to work: If individuals or groups promoting toxic ideas are no longer permitted on a large mainstream platform, then they’ll be forced to migrate to smaller and more insular ones, where they’ll be unable to amass a new audience or recruit new members. But there also may be a terrifying side effect to the de-platforming of QAnon specifically, says Argentino: increased radicalization. On platforms like Telegram, for instance, there has been some recent overlap between QAnon groups and other, even more extremist far-right sects. “Some individuals who are recently disenfranchised may be more likely to find [more extremist ideas] attractive,” he says.

It’s in part for this reason (as well as the fact that other platforms, like Facebook, TikTok, and YouTube, have yet to institute similar policies) that Argentino feels that Twitter’s recent de-platforming efforts may not be as effective as one would hope. “There’s such a diverse and active community, that censorship may not be the way to deal with them,” he says. “It’s good short-term PR for Twitter…but in the long run, you still have a large pool of individuals committed to this who will be put on alternative platforms without community guidelines or counterforces to un-pill these individuals.”

At the end of the day, conspiracy theories and their resulting communities are “like a hydra,” says Stansberry. “You cut off one head, more heads grow. And you can make it stronger.”