The drones fell out of the sky over China Lake, California, like a colony of bats fleeing a cave in the night. Over 100 of them dropped from the bellies of three Boeing F/A-18 Super Hornet fighter jets, their sharp angles cutting across the clear blue sky. As they encircled their target, the mechanical whir of their flight sounded like screaming.

This was the world’s largest micro-drone swarm test. Conducted in October 2016 by the Department of Defense’s Strategic Capabilities Office and the Navy’s Air Systems Command, the test was the latest step in what could be termed a swarm-drone arms race. China had previously been ahead when they tested a swarm of 67 drones flying together – calling their technology the “top in the world” – but with the latest test of 103 drones, the United States was once again ahead. What made this test intriguing, however, was the drones themselves – and what they might mean for the future of warfare.

Drones have come a long way from their initial uses for surveillance. On February 4th, 2002, in the Paktia province of Afghanistan near the city of Khost, the CIA used an unmanned Predator drone in a strike for the first time. The target was Osama bin Laden. Though he turned out not to be there, the strike killed three men nonetheless. The CIA had used drones for surveillance before, but not in military operations, and not to kill. What had once merely been a flying camera in the sky was now weaponised.

In the following years, the Bush administration authorised 50 drone strikes, while the Obama administration greatly expanded the program, authorising 506 strikes over its tenure. These were almost exclusively Predator and Reaper drones, piloted by a human hundreds of miles away in American military bases, interfacing with systems that resembled playing a video game more than flying a combat fighter. The pilots were so far removed from the killings that the military itself had a term for a drone kill – “bug splat.”

Pilot Mark Bernhardt keeps an eye on a Predator unmanned drone from his chase plane as they fly over Victorville, CA, on January 9, 2010.

As we enter the Trump era, the military’s reliance on drone warfare isn’t going anywhere. The weekend that President Trump took office, three authorised U.S. drone strikes took place in Yemen, targeting alleged members of al-Qaeda. Thirty people were killed, including 10 women and children. One of the children killed was the eight-year-old daughter of Anwar Awlaki, the American imam who joined Al Qaeda in Yemen and who himself was killed by a U.S. drone strike in 2011.

Since the first attacks, the military has continued to expand drone capabilities and integrate them into its operations – and swarm drones represent a big shift forward. A single Predator or Reaper drone will generally have multiple people involved with its flight and decision-making process. Not only do these drones require maintenance crews to repair them, each drone also needs a human pilot on the ground to fly it. Perdix drones, on the other hand, communicate autonomously with each other and use collective decision making to coordinate movements, finding the best way to get to a target, even flying in formation and healing themselves – all without a human telling them how. While a single person gives them a task – for example, “go to the local hospital” or “encircle the blue pickup truck” – the drones decide for themselves the best way to carry it out.

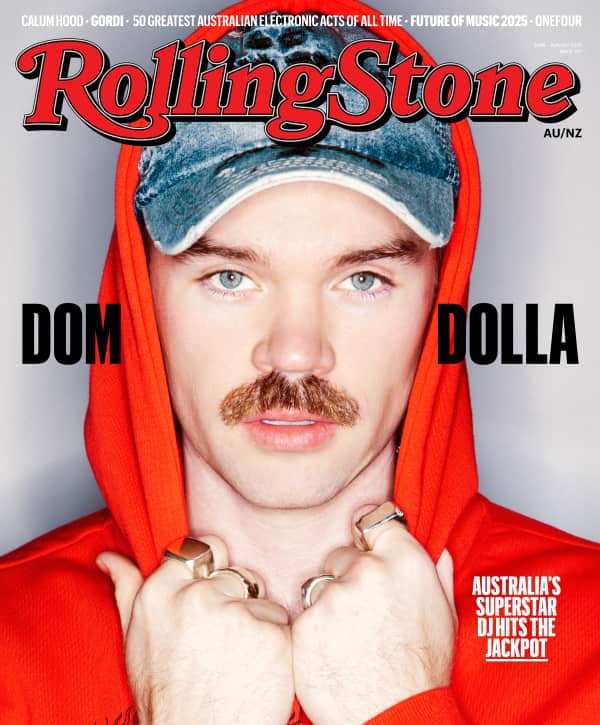

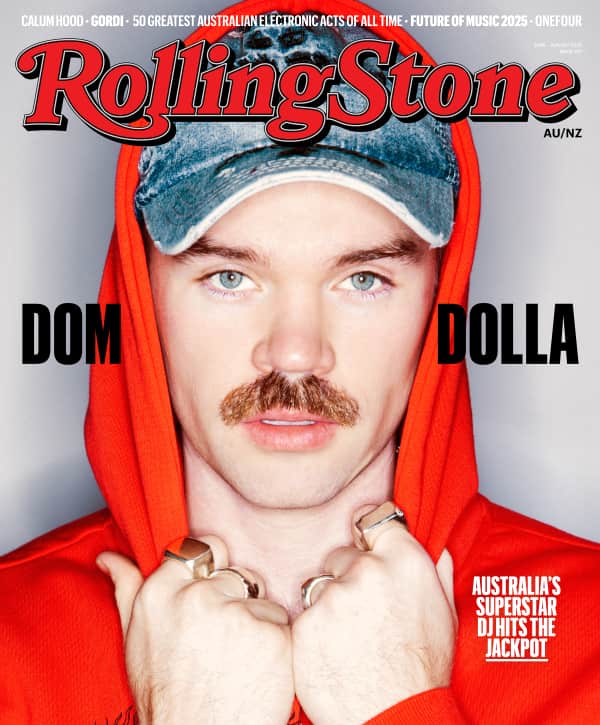

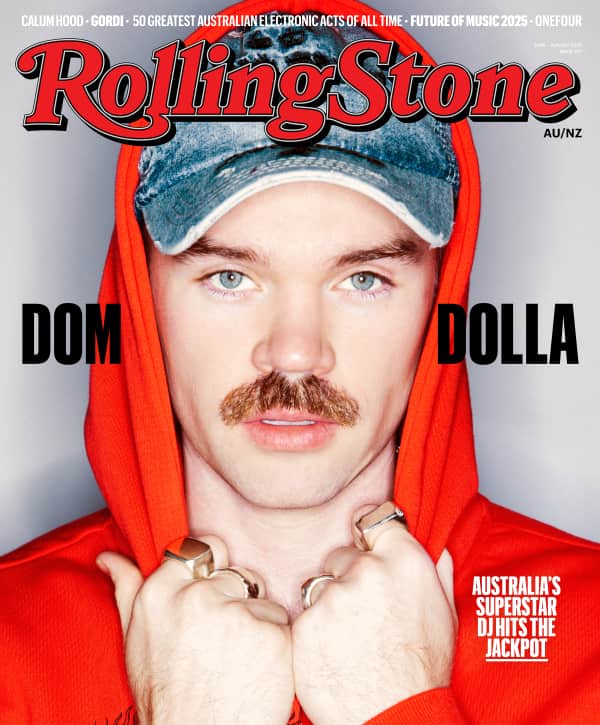

And while the China Lake swarm drone test represents a new way to conceive of drones, it’s not just researchers at the SCO and Navy who are looking to expand their use of swarm drone technology. Just last May, the Air Force released its flight plan for small unmanned aerial devices over the next 20 years, outlining how it would begin to integrate drones and find more ways to use them in its existing projects. The military is also currently exploring ways to embed this technology in conventional military forces such as supply trucks crossing the desert or vessels patrolling a foreign coast. In 2014, the Navy successfully tested autonomous swarm boats, with their technology allowing unmanned vehicles to “not only protect Navy ships, but also, for the first time, autonomously ‘swarm’ offensively on hostile vessels.” At one point during the test, as many as 13 boats operated autonomously and coordinated their movements, all without a sailor actually at the controls.

As these various paths of technological development are pursued, there’s one question that lingers over them all: How should we govern the autonomous weapons of the future? What is too far when it comes to developing technology that might be capable of making life and death decisions? In a 2015 open letter on autonomous weapons from the Future of Life Institute, artificial intelligence and robotics researchers including Elon Musk and Stephen Hawking warned that, with these technologies, “the stakes are high: autonomous weapons have been described as the third revolution in warfare, after gunpowder and nuclear arms.”

The U.S. attempt to set limits on the development of autonomous weapons centers on Directive 3000.09, which governs the DOD’s policies around lethal autonomous weapons. The military directive, which was enacted in 2012, in essence gives the green light to some autonomous projects while determining that other projects need to go through an extensive testing and review process. While it might be convenient to imagine this code as the U.S. saying it would never allow autonomous technology to make a life or death decision without a human being involved, that’s not the case, according to Paul Scharre, a senior fellow and director of the Future of Warfare Initiative at the Center for a New American Security, who led the DOD working group that drafted the directive.

“It’s often characterised that way publicly, but that’s probably a misreading,” says Scharre, who explains that the directive sets out guidelines for technology developers, but doesn’t forbid anything. It does, he says, encourage a process of review as well as provide guidelines for allowing developers to be inventive, without worry they’re crossing a line. So while it doesn’t forbid developers from making autonomous weapons systems, it does set up regulations about what needs to go through extensive testing and vetting.

Directive 3000.09 is set to expire later this year, though, when its five-year limit runs out. It will be up to Donald Trump to decide whether the U.S. continues along its current stance, or abandons the guidelines that the directive lays out. The early results are concerning. Trump’s fixation on the military, his proposal to increase the DOD’s budget at the expense of other federal agencies and his erratic approach to everything from nuclear weapons to NATO don’t point towards a nuanced approach to lethal autonomous weapons systems and their governance. If anything, his emphasis on “winning,” regardless of cost, could push him towards embracing a more wide-open approach to these weapons’ development and use.

Countries like China and Russia continue to forge ahead with the development of drones and autonomous systems, for fear of falling behind others. And most countries actively engaged in the research and development of these systems have not formulated policies or military doctrines akin to Directive 3000.09. There is, in fact, no international consensus governing how autonomous technology can be developed or weaponised. If Trump abandons Directive 3000.09, it will only add to this void.

Professor James Rogers, an associate lecturer at the University of York and author of the upcoming book Precision: A History of American Warfare, isn’t sure we are ready to face this future. “What happens when someone dies, and it’s an autonomous machine that got it wrong and killed them. Who is accountable for that?” he asks. “If it’s the coding that got it wrong, then is the coder responsible? It’s a hard one. It’s not a chain of responsibility that at that point you can really follow, and that’s the problem.”

The Micro UAV – Unmanned Aerial Vehicle – called Perdix is displayed at the Defense Advanced Research Projects Agency building on Friday, March 4, 2016, in Arlington, VA. The drones, usually deployed in swarms, are used as part of a Pentagon program called the Strategic Capabilities Office that focuses heavily on innovating with existing equipment and weapons.

“In my mind, the next generation of a swarm drone is a cluster bomb drone,” says Rogers. Cluster bombs are munitions that contain multiple explosive submunitions – or clusterlets – and are dropped from aircraft or fired from the ground or sea, before opening up in mid-air to release tens or hundreds of clusterlets that explode on impact. Rogers says that such a weapon would be devastating, enabling simultaneous precision strikes on multiple targets: instead of normal clusterlets, which just fall, these drones would navigate to a specific target once released.

As Rogers describes it, these “cluster drones” could link up in the air autonomously once dispersed from the cluster-bomb shell to maximize their payload, or they could immediately disperse to separate pre-set targets. “Each drone has a definitive amount of explosive attached to it,” he says. “Then, once the cluster bomb is dropped, these drones swarm out of it, find their specific target, hit it and explode.”

Drone cluster bombs are just one subset of what are known as loitering munitions, or unmanned aerial vehicles that are designed to blow up targets that are outside of the operator’s sight with an attached warhead or explosive.

Loitering munitions are equipped with ultra-high resolution and infrared cameras that make it possible for a soldier to keep the explosive in a holding pattern in the air while they identify and watch a target, before striking when the time is ideal.

Intended for use in congested urban warfare, “loitering” is perhaps the most innocuous term for these drones. They’re also known as “suicide drones” or “kamikaze drones” as they are programmed to explode on impact, with no intention of being recovered.

And the U.S. military doesn’t have a monopoly on these kinds of drones. “It’s not just the U.S. building loitering munitions,” says Dan Gettinger, the Co-Founder of the Center for the Study of the Drone at Bard College. “Places like Israel, China, South Korea, Turkey, Poland and Iran already have, or are looking to invest in, loitering munitions because they can be seen as an affordable alternative to a really expensive missile or drone.” This is because it’s essentially a cheaper missile, guided by the brain of a drone that allows visual monitoring, targeting and timing, without the expectation of getting it back.

As innovations such as swarm micro-drones and loitering munitions continue to advance and expand how governments and their constituencies think of drones, the technology has spread to both state and non-state actors, sometimes with deadly results.

James Beven, the Executive Director of Research at the Conflict Armament Research group (CAR), an organisation that identifies and tracks conventional weapons and ammunition in contemporary armed conflicts, has said that the use of drones by insurgent groups is a growing international security concern. With the large number of drones now available on commercial markets, they’re making their way into the hands of non-state actors such as Hezbollah and ISIS. What once may have been a hypothetical no longer is.

“I see drones very much as the weapon of choice for non-state actors for the next ten years,” says Caroline Kennedy, professor of war studies at the University of Hull in the United Kingdom. “Just as improvised explosive devices (IEDs) dominated our thinking about terrorist attacks and on the battlefield, I think drones in combination with IEDs or using IEDs remotely will be what terrorist groups and insurgents utilize.” Instead of IEDs being buried on the side of the road, they’d be dropped from the sky by a low-tech drone.

Rogers, the University of York professor, brings up an incident last October, when Kurdish and French military personnel attempted to return an ISIS drone to their base to deconstruct it, only to have it explode en route. “They took it in with the intention of taking it apart to analyse the technology and, almost like a Trojan horse, the drone exploded,” he says. “The whole point of it was that it was booby-trapped.” The explosion killed two Kurdish fighters and injured two members of French Special Forces.

Despite clear dangers, P.W. Singer, a Strategist and Senior Fellow at the New America Foundation, says he doesn’t see the development of these technologies slowing. “We are going to have more robots taking on a greater number of tasks, and in many ways doing them in a smarter and more effective way than humans,” he says.

Countries and non-state actors are going to come into possession of greater numbers of weaponised drones, and they will start to shape the battlefield in new and dangerous ways. From cluster bomb drones, to swarm drones and kamikaze drones, is the world prepared, or even able, to confront the threats of drones like these, particularly as autonomous technology is further developed and integrated with them?

Autonomous drones will undoubtedly change how warfare is fought as they continue to be integrated into the military. The U.S. has already seen the massive impact drones can have on combat in places like Yemen, Pakistan and Afghanistan, but adding autonomous swarm drones and loitering munitions to the capabilities of our military represents a new horizon. As retired Gen. Stanley McChrystal said in an interview with Foreign Affairs, “To the United States, a drone strike seems to have very little risk and very little pain. At the receiving end, it feels like war. Americans have got to understand that.” This technology will change the human cost of warfare, pushing us to use drones for an increasing number of tasks the world may have once solely considered the purview of humans. In doing so it not only changes what the battlefield looks like, but also the calculation about whether to go to war in the first place.