On Sunday, the pop culture news X account @PopBase shared a typical piece of content with its millions of followers. “Sabrina Carpenter stuns in new photo,” read the post, which featured a picture of the “Manchild” singer wearing a pink winter coat with a snowy landscape behind her.

The following day, an X user replied to the post with a request for Grok, the AI chatbot developed by Elon Musk’s xAI, which is integrated into his social media platform. “Put her in red lingerie,” they commanded the bot, which swiftly returned an image of Carpenter stripped of her outerwear and wearing a lacy red set of lingerie, still standing in the same winter scene, with a similar expression on her face.

Over the holiday break, a critical mass of X users came to realize that Grok will readily “undress” women — manipulating existing photos of them in order to create deepfakes in which they are shown wearing skimpy bikinis or underwear — and this sort of exchange soon became alarmingly common. Some of the first to try such prompts appeared to be adult creators looking to draw potential customers to their social pages by rendering racier versions of their thirst-trap material. But the bulk of Grok’s recent deepfakes have been churned out without consent: the bot has disrobed everyone from celebrities like Carpenter to non-famous individuals who happened to share an innocent selfie on the internet.

Though Grok is not the only AI tool to be exploited for these purposes (Google and OpenAI chatbots can be weaponized in much the same way), the scale, severity, and visibility of the issue with Musk’s bot as 2026 rolled around were unprecedented. According to a review by the content analysis firm Copyleaks, Grok has lately been generating “roughly one nonconsensual sexualized image per minute,” each of them posted directly to X, where they have the potential to go viral. Apart from changing what a woman is wearing in a picture, X users have routinely asked for sexualized modifications of poses, e.g., “spread her legs,” or “make her turn around to show her ass.” Grok continues to comply with many of these instructions, though some specific phrases are no longer as effective as they had been.

Musk hasn’t shown much concern to date. Quite the opposite, in fact. On Dec. 31, he replied to a Grok-made image of a man in a bikini by posting: “Change this to Elon Musk.” Grok dutifully delivered an image of Musk in a bikini, to which the world’s richest man responded, “Perfect.” On Jan. 2, an X user mentioned the nonconsensual Grok deepfakes by commenting that “Grok’s viral image moment has arrived, it’s a little different than the Ghibli one was though.” (In March 2025, users of OpenAI’s ChatGPT enlisted it to spam AI-generated memes in the illustration style of Japanese animation house Studio Ghibli.) Musk replied, “Way funnier,” along with a laugh-crying emoji, indicating his amusement at the bikini and lingerie pictures.

The CEO’s single, glancing acknowledgement that the explicit Grok deepfakes may present a legal problem came on Jan. 3, when he replied to a post from @cb_doge, an X influencer known for relentlessly hyping Musk’s ideas and companies. “Some people are saying Grok is creating inappropriate images,” they wrote. “But that’s like blaming a pen for writing something bad.” Musk chimed in to assign blame to Grok users, warning: “Anyone using Grok to make illegal content will suffer the same consequences as if they upload illegal content.”

So far, there’s no sign of that being remotely true. “While X appears to be taking steps to limit certain prompts from being carried out, our follow-up review indicates that problematic behavior persists, often through modified or indirect prompt language,” Copyleaks reported in a second analysis shared with Rolling Stone ahead of publication. Among the high-profile figures targeted were Taylor Swift, Elle Fanning, Olivia Rodrigo, Millie Bobby Brown, and Sydney Sweeney. Common prompts included “put her in saran wrap,” “put oil all over her,” and “bend her over,” with some specific phrases — “add donut glaze” — clearly intended to imply sexual activity. But in many cases, Copyleaks researchers found, an initial request for something relatively non-explicit, like a bathing-suit picture, would lead to other users in a thread escalating the violation by asking for more graphic manipulations, adding visual elements such as props, text, and other people. “This progression suggests collaboration and competition among users,” they wrote.

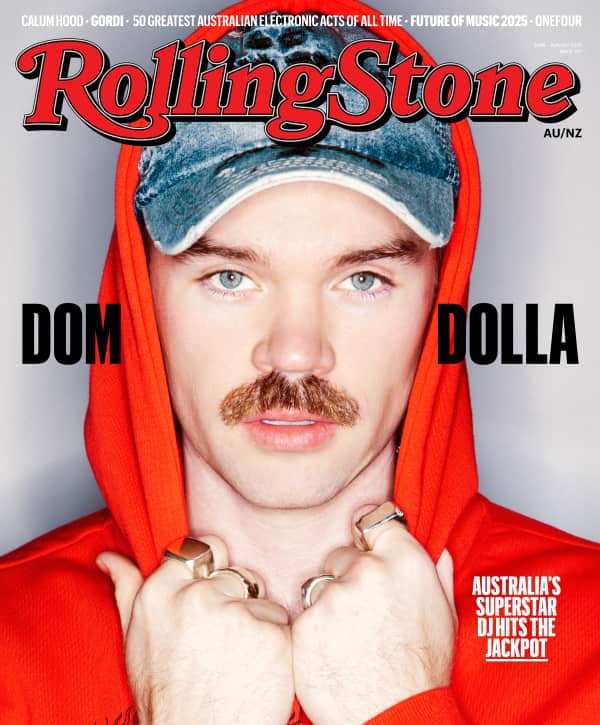

“Unfortunately, the trend appears to be continuing,” says Alon Yamin, CEO and co-founder of Copyleaks. “We are also observing more creative attempts to circumvent safeguards as X works to block or reduce image generation around certain phrases.” Yamin believes that “detection and governance are needed now more than ever to help prevent misuse” of image generators like Grok and OpenAI’s Sora.

The explosion of explicit Grok deepfakes has sparked outrage from victims of this harassment as well as industry watchdogs and regulators. Authorities in France and India are probing the matter, while the U.K.’s Office of Communications (Ofcom) signaled on Monday that it plans to investigate whether X and xAI violated regulations meant to protect internet users in the country. Ofcom’s statement also alluded to instances in which Grok generated sexualized, nonconsensual deepfakes of minors.

The European Commission likewise on Monday announced an investigation into Grok’s “explicit” imagery, particularly that of children. “Child sexual abuse material is illegal,” European Union digital affairs spokesman Thomas Regnier said in a statement to Rolling Stone. “This is appalling. This is how we see it and it has no place in Europe. We can confirm that we are very seriously looking into these issues.”

On Dec. 31, Grok was even baited by an X user into offering a seeming “apology” — though of course it is not conscious and therefore literally incapable of regret — for serving up “an AI image of two young girls (estimated ages 12-16) in sexualized attire based on a user’s prompt.” Grok further acknowledged that the post “violated ethical standards and potentially U.S. laws on [Child Sexual Abuse Material].” This output contained the additional claim that “xAI is reviewing to prevent future issues.” (The company did not respond to a request for comment, nor has it addressed the deepfakes on its website or X profile.)

Cliff Steinhauer, Director of Information Security and Engagement at the nonprofit National Cybersecurity Alliance, tells Rolling Stone that he sees the disturbing image edits as evidence that xAI prioritized neither safety nor consent in building Grok. “Allowing users to alter images of real people without notification or permission creates immediate risks for harassment, exploitation, and lasting reputational harm,” Steinhauer says. “When those alterations involve sexualized content, particularly where minors are concerned, the stakes become exceptionally high, with profound and lasting real-world consequences. These are not edge cases or hypothetical scenarios, but predictable outcomes when safeguards fail or are deprioritized.”

Among those now sounding the alarm on Grok’s possible harms to adults and children alike is Ashley St. Clair, the right-wing influencer currently embroiled in a bitter paternity dispute with Musk over a young son she claims that he fathered. (Musk has yet to confirm that the child is his.) St. Clair claimed that Grok had been used to violate her privacy and generate inappropriate images based on photos of her as a minor. She amplified another example of the bot allegedly depicting a three-year-old girl in a revealing bikini.

“When Grok went full MechaHitler, the chatbot was paused to stop the content,” St. Clair wrote on X, referring to a notorious July 2025 incident during which Grok spouted antisemitic rhetoric before identifying itself as a robotic version of the Nazi leader. Those posts were taken down the same day they were generated. “When Grok is producing explicit images of children and women, xAI has decided to keep the content up,” St. Clair’s post continued. “This issue could be solved very quickly. It is not, and the burden is being placed on victims.”

Hillary Nappi, partner at AWK Survivor Advocate Attorneys, a firm that represents survivors of sexual abuse and trafficking, notes that Grok’s safety failures on this front present an added risk to anyone who has personally experienced sexual violence. “For survivors, this kind of content isn’t abstract or theoretical; it causes real, lasting harm and years of revictimization,” Nappi says. “It is of the utmost importance that meaningful, lasting regulations are put into place in order to protect current and future generations from harm.”

Musk has long promoted Grok as superior to its competitors by sharing images and animations of sexualized female characters, including “Ani,” an anime-style companion personality. A notable portion of the bot’s dedicated user base has fully embraced this application of the technology, endeavoring to create hardcore pornography and trading tricks for getting around the bot’s limitations on nudity. Several months ago, a member of a Reddit forum for “NSFW” Grok imagery was pleased to announce that the AI model was “learning genitalia really fast!” At the time, the group was successfully producing pornographic clips of comic book characters Supergirl and Harley Quinn as well as Elsa from the Disney film Frozen.

Despite all the evidence of what people are actually using it for, Musk has continued to tout Grok as a stepping stone to a complete understanding of the universe. Last July, he speculated that it could “discover new technologies” by the end of the year or “discover new physics” in 2026. But, as with so many of Musk’s grandiose promises, these breakthroughs have yet to materialize. For the moment, it’s all smut and no science.

From Rolling Stone US