Some of the misinformation propagated on social media is promoted by people who genuinely believe it to be true. But much of it isn’t promoted by people at all; it comes from automated accounts, or bots. A new study from Carnegie Mellon University’s Center for Informed Democracy & Social Cybersecurity bears this out, finding that a whopping 45% to 60% of the Twitter accounts promoting misinformation related to the coronavirus were bots.

As part of the study, researchers analyzed nearly 200 million tweets about the coronavirus posted since January. They looked for specific markers that typically identify bots, such as whether an account tweets multiple times in a short period or copies and pastes text from other tweets. The team found more than 100 false narratives and conspiracy theories related to COVID-19 that were perpetuated by bots, such as the idea that 5G wireless towers are spreading the virus, or that the virus was created in a lab in Wuhan, China.

The team did not identify where, exactly, these bots were coming from, but it did find that bots accounted for about 82% of the 50 most influential retweeters. “We’re seeing up to two times as much bot activity as we’d predicted based on previous natural disasters, crises, and elections,” Kathleen Carley, the center’s director, told NPR.

In itself, it isn’t exactly surprising that bots are leading the charge in promoting disinformation related to COVID-19. It is not uncommon for bots created by organized disinformation campaigns to flood platforms during moments of great change or instability, such as U.S. or foreign elections or natural disasters, as we saw with Russia’s interference in the 2016 U.S. presidential election. Back in March, Reuters also reported on a European Union document outlining “a significant disinformation campaign by Russian state media and pro-Kremlin outlets regarding COVID-19” to sow panic and instability in Western countries, an allegation the Kremlin denied.

What is surprising is the degree to which bots are flooding the platforms: As Carley told MIT Technology Review, bots typically make up about 10% to 20% of disinformation campaign activity. The COVID-19 pandemic appears to present a unique opportunity for bad actors to spread false information. As Rolling Stone has previously reported, platforms have struggled to curb COVID-19 conspiracy theories, despite adopting policies that would ostensibly prohibit the spread of misleading or potentially dangerous medical information.

In a statement, a spokesperson for Twitter pointed to a company blog post disputing the idea that all bots are created to sow misinformation, and said that “the term is used to mischaracterize accounts” that do not fall into that category. “What’s more important to focus on in 2020 is the holistic behavior of an account, not just whether it’s automated or not. That’s why calls for bot labeling don’t capture the problem we’re trying to solve and the errors we could make to real people that need our service to make their voice heard,” the spokesperson said.

The spokesperson added that the company has removed more than 2,600 tweets related to COVID-19 and challenged more than 4.3 million accounts “which were targeting discussions around COVID-19 with spammy or manipulative behaviors,” though the spokesperson also stated that “we will not take enforcement action on every Tweet that contains incomplete or disputed information about COVID-19.”

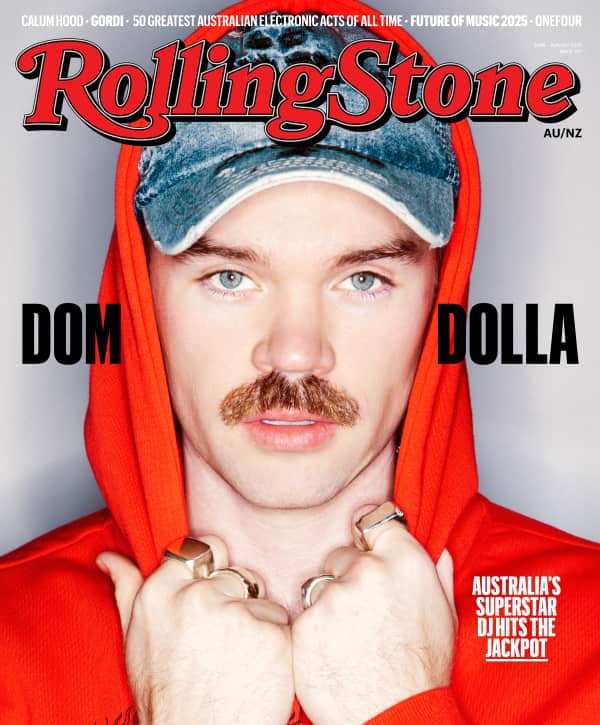

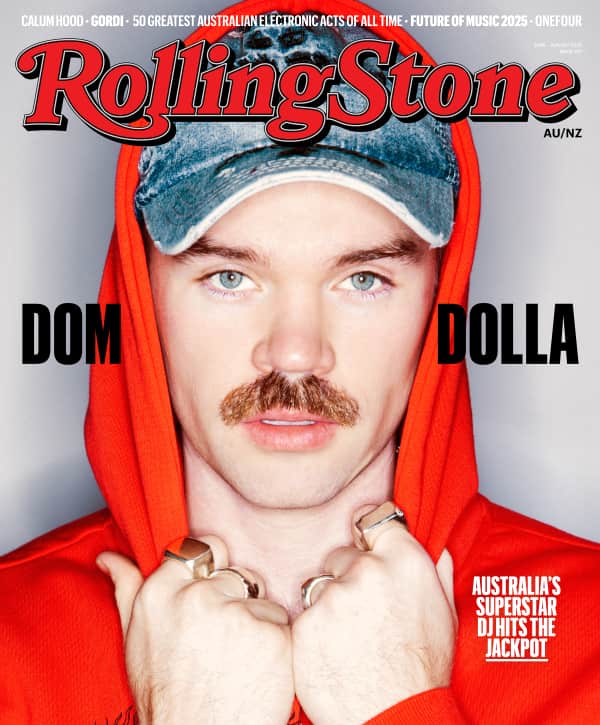

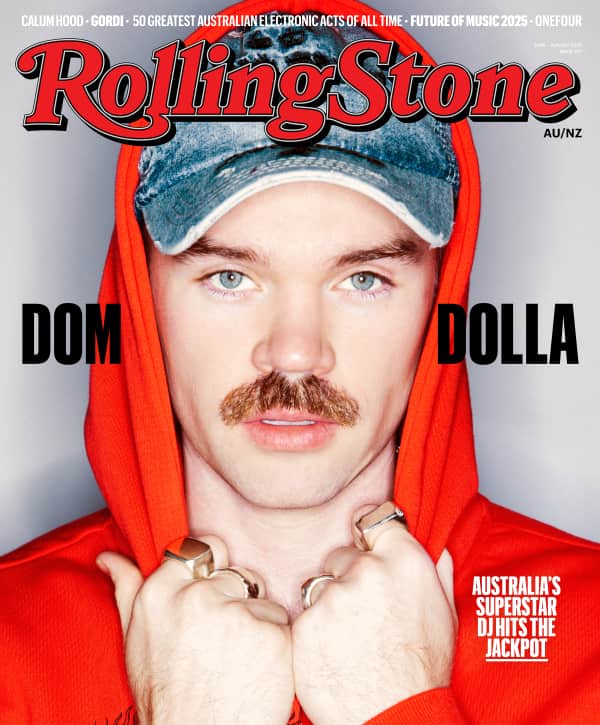
