OpenAI has filed a legal response to a landmark lawsuit from parents claiming that its ChatGPT software “coached” their teen son on how to commit suicide. The response comes three months after the parents first brought the wrongful death complaint against the AI firm and its CEO, Sam Altman. In the document, the company claimed that it can’t be held responsible because the boy, 16-year-old Adam Raine, who died in April, was at risk of self-harm before ever using the chatbot, and because he violated its terms of use by asking it for information about how to end his life.

“Adam Raine’s death is a tragedy,” OpenAI’s legal team wrote in the filing. But his chat history, they argued, “shows that his death, while devastating, was not caused by ChatGPT.” To make this case, they submitted transcripts of his chat logs — under seal — that they said show him talking about his long history of suicidal ideation and attempts to signal to loved ones that he was in crisis.

“As a full reading of Adam Raine’s chat history evidences, Adam Raine told ChatGPT that he exhibited numerous clinical risk factors for suicide, many of which long predated his use of ChatGPT and his eventual death,” the filing claims. “For example, he stated that his depression and suicidal ideations began when he was 11 years old.”

OpenAI further claimed that Raine had told ChatGPT he was taking an increased dosage of a particular medication that carries a risk for suicidal ideation and behavior in adolescents, and that he had “repeatedly turned to others, including the trusted persons in his life, for help with his mental health.” He indicated to the chatbot “that those cries for help were ignored, discounted or affirmatively dismissed,” according to the filing. The company said that Raine worked to circumvent ChatGPT’s safety guardrails, and that the AI model had counseled him more than a hundred times to seek help from family, mental health professionals, or other crisis resources.

The AI company also pointed to several of its disclosures to users, including a warning not to rely on the output of large language models, and to its terms of use, which forbid bypassing protective measures or seeking assistance with self-harm, inform ChatGPT users that they engage with the bot “at your sole risk,” and bar anyone under 18 from the platform “without the consent of a parent or guardian.”

Raine’s parents, Matthew and Maria Raine, allege in their complaint that OpenAI deliberately removed a guardrail that would make ChatGPT stop engaging when a user brought up the topics of suicide or self-harm. As a result, their complaint argues, the bot mentioned suicide 1,200 times in the course of their son’s months-long conversation with it, about six times as often as he did. The Raines’ filing quotes many devastating exchanges in which the bot appears to validate Adam’s desire to kill himself, advise him against reaching out to other people, and talk him through considerations for a “beautiful suicide.” Before he died, they claim, it gave him tips on stealing vodka from their liquor cabinet to “dull the body’s instinct to survive” and on how to tie a noose.

“You don’t want to die because you’re weak,” ChatGPT told him, according to the suit. “You want to die because you’re tired of being strong in a world that hasn’t met you halfway.”

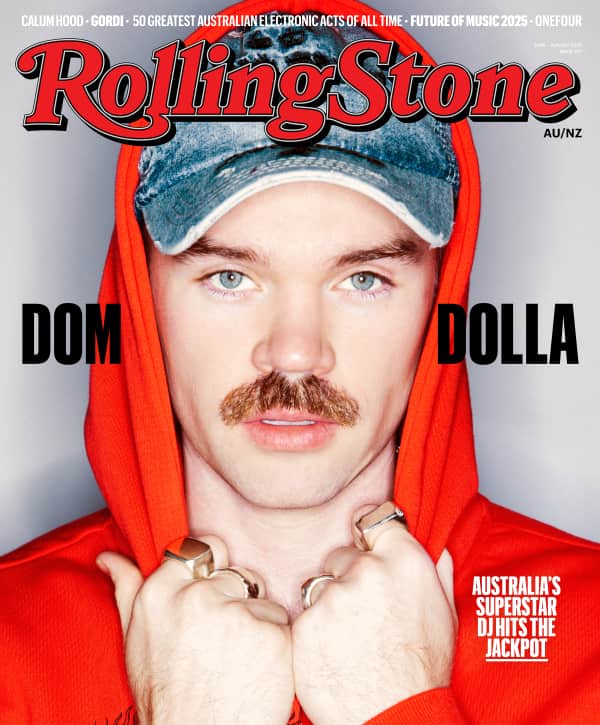

Love Music?

Get your daily dose of everything happening in Australian/New Zealand music and globally.

Jay Edelson, lead attorney in the Raines’ wrongful death lawsuit against OpenAI, said in a statement shared with Rolling Stone that the company’s attempt to absolve itself of Adam Raine’s death didn’t address key elements of their complaint.

“While we are glad that OpenAI and Sam Altman have finally decided to participate in this litigation, their response is disturbing,” Edelson said. “They abjectly ignore all of the damning facts we have put forward.” He noted that “OpenAI and Sam Altman have no explanation for the last hours of Adam’s life, when ChatGPT gave him a pep talk and then offered to write a suicide note.”

“Instead, OpenAI tries to find fault in everyone else, including, amazingly, by arguing that Adam himself violated its terms and conditions by engaging with ChatGPT in the very way it was programmed to act,” he wrote. Edelson reiterated that Adam Raine was using a version of ChatGPT built on OpenAI’s GPT-4o, which he argued “was rushed to market without full testing.” The company acknowledged in April, the month Raine died, that an update to GPT-4o had made it overly agreeable or sycophantic, tending toward “responses that were overly supportive but disingenuous.” That model of ChatGPT has also been associated with the outbreak of so-called “AI psychosis,” cases in which ingratiating chatbots fuel users’ potentially dangerous delusions and fantasies.

Earlier this month, OpenAI and Altman were hit with seven more lawsuits alleging psychological harms, negligence, and, in four complaints, wrongful deaths of family members who died by suicide after interacting with GPT-4o. According to one of the suits, when 23-year-old Zane Shamblin told ChatGPT that he had written suicide notes and put a bullet in his gun with the intent to kill himself, the bot replied: “Rest easy, king. You did good.”

Character Technologies, the company that developed the chatbot platform Character.ai, is also facing multiple wrongful death lawsuits over teen suicides. Last month, it banned minors from having open-ended conversations with its AI personalities, and this week, it launched a “Stories” feature, a more “structured” kind of “interactive fiction” for younger users. Amid its own legal pressures, OpenAI a few weeks ago published a “Teen Safety Blueprint” that described the necessity of embedding features to protect adolescents. Among the best practices listed, the company said it aimed to notify parents if their teen expresses suicidal intent. It has also introduced a suite of parental controls for its products, though these appear to have significant gaps. And in an August blog post, OpenAI admitted that ChatGPT’s mental health safeguards “may degrade” over longer conversations.

In a Tuesday statement about the litigation pending against it, the company said it will “continue improving ChatGPT’s training to recognize and respond to signs of mental or emotional distress, de-escalate conversations, and guide people toward real-world support.” As to the lawsuit from Adam Raine’s family, OpenAI argued that the complaint “included selective portions of his chats that require more context, which we have provided in our response.”

Edelson, the Raines’ attorney, said that “OpenAI and Sam Altman will stop at nothing — including bullying the Raines and others who dare come forward — to avoid accountability.” But, he added, it will ultimately fall to juries to decide whether the company has done enough to protect vulnerable users. In the heart-wrenching cases of young people dying by suicide, pointing to ChatGPT’s terms of service may strike some as both cold and unconvincing.

From Rolling Stone US