For Benoit Carré, the future revealed itself in six notes. In 2015, Carré, a cerebral, bespectacled songwriter then in his mid-forties, became the artist-in-residence at Sony’s Paris-based Computer Science Laboratory, headed by his friend François Pachet, a composer and leading artificial-intelligence researcher. Pachet was developing some of the world’s most advanced AI-music composition tools, and wanted to put them to use. The duo’s first released project was the mostly AI-composed Beatles pastiche “Daddy’s Car,” which ended up making worldwide headlines in 2016 as a technological milestone. But Carré was looking for something deeper, something new.

“I’ve always been interested in music with unexpected chord changes, unexpected melodies,” Carré says. “I’ve always searched for that kind of surprise in my work, too. And that means that I need to lose control at some point.” Shortly after he finished work on “Daddy’s Car,” Carré sat in the lab one day and fed the sheet music for 470 different jazz standards into artificial-intelligence software called Flow Machines. As it started generating new compositions based on that input, one short melody transfixed Carré, staying in his head for days. That sequence of notes became the core of a genuinely odd, novel, haunting song, “Ballad of the Shadow,” and Carré decided the Flow Machines AI had fused with him into a new artist — a composite he named Skygge. The song became a track on the first-ever AI-composed album, 2018’s Hello World (credited to Skygge), which received respectful press, but didn’t make it past the arty fringes of pop culture.

Five years later, AI is the hottest topic in music, largely thanks to a much bigger song, and a very different use of AI. In 2022, a group of researchers began work on an open-source tool formally known as SoftVC VITS Singing Voice Conversion. Building on more primitive software that allowed users to generate raps in, say, Eminem’s voice by typing words into an interface, SVC allowed for transformation of one voice into another, to increasingly convincing effect. Cover songs using the tool were floating around TikTok by early 2023. But every new musical technology needs a breakthrough hit, and for AI voice-cloning, that arrived on April 4, 2023.

From the beginning, “Heart on My Sleeve,” a song by the anonymous songwriter Ghostwriter977, featuring synthetic Drake and the Weeknd vocals, was misunderstood and overhyped. One of the tweets that helped propel its virality described the song as “AI-generated,” a wild mischaracterization that major news outlets helped spread. The rise of ChatGPT and image-generating tools like Midjourney seemingly led to the delusion that AI already had limitless capabilities, that perhaps Ghostwriter977 had typed a description of a Drake-Weeknd collab into some magical tool that spat out a finished song.

https://www.youtube.com/watch?v=7HZ2ie2ErFI

That tool doesn’t exist, and experts agree that “Heart on My Sleeve” was written, produced, and sung in its entirety by a human, with the only AI intervention arriving via the transformation of the vocals into Drake’s and the Weeknd’s voices. Generative AI may be able to produce convincing C+-level college essays and movie-poster-style artwork without human assistance, but full, convincing pop songs with vocals and lyrics? Still not possible, largely because of the sheer number of subtle intricacies in a piece of recorded music, from the underlying composition to vocal inflections down to, say, the reverb tail on the snare drum. “It is daunting,” says Pachet, whose AI-music research dates back to the Nineties. “It’s incredible, the level of complexity.”

Many in the business found “Heart on My Sleeve” unimpressive — the Weeknd segments aren’t even convincing fakes — or even racist in its casual thievery of Black artists’ likenesses. But the opening faux-Drake verse was catchy enough, in an If You’re Reading This It’s Too Late vein, to cause a sensation as a TikTok snippet. The song garnered more than half a million streams before Universal Music managed to take it down, using a technicality. Ghostwriter977 foolishly used one of Metro Boomin’s producer tags (a copyright-protected snippet of Future rapping “If young Metro don’t trust you …”), which prevented the label from having to wade into the fraught and still-unsettled territory of whether the mere sound of a singer’s voice is copyrighted. (Ghostwriter977, who claimed to be an undercompensated professional songwriter, has since gone quiet, and after some initial interest in giving an interview for this article, stopped replying to emails.)

Since then, the music industry’s AI conversation has been almost entirely focused on voice-cloning, overshadowing, for now, the considerable threat and promise of other forms of machine-made music. Even the May release to beta testers of MusicLM, a shockingly sophisticated, though still rickety, tool by Google, went largely unnoticed. MusicLM generates audio files of instrumental musical snippets from written descriptions, like music criticism in reverse, or a baby version of the fictional song-generating tool people imagined in the hands of Ghostwriter977.

Meanwhile, “AI” has become an all-purpose, oft-confusing buzzword in music: When Paul McCartney announced in June that a final, “Free As A Bird”-style Beatles song was coming, using neural-net-powered software developed by Peter Jackson’s WETA to isolate John Lennon’s voice from a demo, countless news outlets ran controversy-stirring headlines about an “AI” song from the band. But versions of the same track-isolating technology have been around for years — Serato features a version of it with its latest DJ software, apps like Moises offer it, and Giles Martin used it on his Revolver remix last year — and it’s worlds away from generative AI.

Some see a world of possibilities and concern in voice-cloning technology alone, but Carré and Pachet aren’t so sure. “It’s fun to make a song and to transfer your voice into Eminem’s timbre,” Carré says. “But for musicians, for creation, I don’t see what is interesting here.”

IT DIDN’T TAKE long for the amateur producers playing with voice-cloning to stumble upon one of its most enticing, if unnerving, uses: the resurrection of the dead. The unauthorized nature of the tech inevitably led users toward musical blasphemy, whether it was making the Notorious B.I.G. diss himself by taking over the vocals of Tupac Shakur’s “Hit ’Em Up,” or the aesthetic crime of forcing Kurt Cobain to sing songs by Seether. And then there’s Jeff Buckley singing Lana Del Rey, which turns out to be oddly compelling. No one seems to have tried doing much yet with the voice of Prince, who, before his death, called pre-AI posthumous manipulations “demonic.”

Joel Weinshanker, managing partner of Elvis Presley Enterprises, sees a business there. He’s not particularly interested in commissioning new AI-assisted Presley songs for commercial release, believing the result would lack any real soul. But if tracks along those lines do emerge, he’s more interested in finding a way to monetize them than in shutting them down. And what does excite him is something more prosaic: giving fans the ability to sing Elvis songs karaoke-style — with Elvis’ own voice. “We’re gonna be first in line for when the technology is there, and when the system is in place to compensate rights holders,” he says. “Every licensed karaoke machine is paying the intellectual-property owners. All this really is, is karaoke times 1,000.”

So far, only one major artist, living or dead, is allowing unlimited use of digital clones of her voice: Grimes. Following in the footsteps of the pioneering AI artist Holly Herndon, who has been offering up her voice for cloning since 2021, Grimes announced in April she would allow anyone to record songs with her voice, and split any revenue 50-50 with them. Her system, which has a dedicated website (Elf.tech), has led to several solid songs, but as even her own manager, Daouda Leonard, acknowledges, Grimes is in less danger than most big-name artists of compromising commercial viability or flooding the market — she’s never had a radio hit. “You don’t hear Grimes that much anyway,” Leonard says. (Carré gave in to the trend, recording a remake of the Skygge song “Ocean Noir” with Grimes’ voice — her first French-language release.)

Grimes is also casually espousing some radical positions, including a belief that copyright, in general, shouldn’t exist. That’s going too far even for some of the most adventurous AI-music enterprises, including the startup Uberduck, which at press time dared to give users easy access to models of famous voices from Ariana Grande to Drake to Justin Bieber — and ran a contest with a $10,000 prize for the best use of Grimes’ voice. “We are pursuing avenues of partnership with basically every major label,” says Uberduck’s 31-year-old founder, Zach Wener, “and we have no interest whatsoever in being the Napster of this movement. I’m only willing to go forth in this world if we can find a path amenable to, and profitable for, artists.” Uberduck has already received some legal threats, he acknowledges, and “we comply with all takedown notices.”

Universal Music Group has described AI music as “fraud” and said that it will “harm artists,” but for many in the industry, at least outside of major-label boardrooms, the idea of squashing voice-cloning seems as impossible as it was to shut down all of the file-sharing services of the early aughts. Instead, they envision a system of monitoring and monetization, ideally with the ability for artists to opt out. Rohan Paul, CEO of the startup Controlla, is one of many innovators working on algorithms to help labels track down, say, a Drake-voiced song that wasn’t labeled as such.

“It’ll be similar to the Napster era, where it gets to a point that we don’t have a choice,” says Paul. “It’s unfortunate. But I think five years from now, it’ll be so easy for anyone to steal someone else’s voice that there has to be a way to make it open season and monetize it. So, I think, we’ll see something like voice royalties.” There’s talk of compulsory licensing for voices, the same way anyone can cover a song without getting the writer’s permission. But it’s hard to see how that would play out in cases like the version of “America Has a Problem” floating around in which someone made an AI Ariana Grande sing a racial slur. Even Grimes has reserved the right to tank offensive content.

On the other hand, the lack of a viral follow-up to “Heart on My Sleeve” in the weeks that followed suggests that — as long as human talent is still needed to write songs — the threat of voice-cloning could’ve been exaggerated in a post-Ghostwriter977 panic. “I think people think it’s going to open door for random people at home to write songs and get them out,” says music manager Trevor Patterson, whose clients include JetsonMade. “And some may slip through the cracks. But if it becomes a thing, it’s gonna be the same songwriters who are dominating now, just using new tools to expand their craft.”

There’s a world where voice-cloned songs could stay mostly underground, as a fun, non-monetizable gimmick that could benefit from the hands-off approach labels had toward rap mixtapes. “Essentially, we’re giving the world the ability to create mixtapes,” says copyright attorney Ateara Garrison. Or maybe it’s even more faddish. “Voice substitution is likely going to play its course a little bit,” says Uberduck’s Wener. “I don’t think it’ll ever go away. But like mash-ups, it’ll probably have its heyday and then get old. But, you know, mash-ups still exist, right?”

Still, AI is moving quickly, and the most consequential use cases for voice-cloning may not yet have emerged. Already, online amateurs are replacing Paul McCartney’s more weathered older vocals on his latter-day songs with clones of his Beatles-era voice, with promising results. Tech-savvy fans have given the same rejuvenating treatment to recordings of Axl Rose’s recent live performances with Guns N’ Roses. Right now, there’s a slight delay in even the best processing scenario, but once that goes away, artists could start using AI clones of their own voices live onstage, as a sort of supercharged AutoTune.

In the studio, they could use Melodyne to tune their voice up to notes they can’t quite hit, and then bring in the vocal clone to smooth it out. Producers could fill in a missing phrase or two on days artists leave a bit too early, and songwriters are already talking about pitching songs to artists using their own voices. And who would really notice if producers buried layers of Brian Wilson’s and Marvin Gaye’s voices deep in their stacks of backing vocals?

There are even wilder possibilities, as Rob Abelow, founder of the consultancy firm Where Music’s Going, suggests: “What if, instead of creating a deepfake of somebody, they make the most beautiful synthetic voice from a combination of all these others?”

WELL BEFORE ANY flood of AI songs, the major labels saw reason to fear being drowned out. Back in January, Universal Music CEO Lucian Grainge noted that streaming services were seeing some 100,000 uploads a day, and that “consumers are increasingly being guided by algorithms to lower-quality functional content that in some cases can barely pass for ‘music.’ ” Anyone who’s heard the generic fodder on something like Spotify’s popular “Peaceful Piano” playlist — often music that Spotify conveniently doesn’t have to pay royalties for — would have to agree.

Humans, again, have already done a fine job of pumping out “lower-quality” content without AI assistance, but there’s one company that even some AI proponents have eyed with suspicion. Boomy, which lets users quickly and easily make simple songs on their phones with AI tools and upload them to streaming services, boasts on its website that its users have recorded “15,477,480 songs, around 14.7 percent of the world’s recorded music.” In May, Spotify pulled down some Boomy songs and temporarily barred the company from posting new songs, citing evidence of suspicious, artificial “streaming farm”-type activity. For some, that raised the specter of a dystopian future where computers streamed music made by computers, a perfect circle of inhumanity.

But Boomy’s CEO, Alex Mitchell, insists his company had nothing to do with the irregular streaming, notes that there’s a “heartbeat” — a human user — “behind every Boomy song,” and clarifies that only a “single-digit percentage” of those 15 million songs have made it to streaming services, since the company screens for quality. Still, he says, “whether anybody likes it or not, or whether Boomy is in this market or not, you have an inevitability that there will be millions of musicians and hundreds of millions, if not billions, of songs per day. More people are going to be making more music with AI tools as the barriers to entry to create and participate in the market have gone down dramatically.”

Boomy’s product, in any case, wasn’t exactly what Grainge was referring to. His concern was largely over “functional music” — sound meant for a purpose, often relaxation or studying. In its purest form, this content does transcend music altogether: “Clean white noise – Loopable with no fade,” from the album Best White Noise for Baby Sleep, has more than a billion plays on Spotify. The most sophisticated iteration of functional music may well be from Endel, which uses generative AI to spawn endless musical beds that the company says are scientifically designed to aid sleep, exercise, and other human necessities (there’s no bathroom or sex settings yet).

Endel has also worked with artists including James Blake to make versions of their music that self-generate via AI into functional soundscapes, and the company’s co-founder and CEO, Oleg Stavitsky, sees that technology as a way for labels to reclaim market share. “Lucian’s comment was, essentially, we’re losing the war to white noise,” Stavitsky says. “Naturally, labels’ market share is shrinking, because more and more people are turning to these types of sounds. Part of the problem is, yes, the DSPs are diverting listener attention to this type of content, because the underlying economics for them is better. But labels [also] don’t have enough functional content. So my whole message to UMG is ‘You can leverage generative AI today, to turn your catalog to create mass-produced, functional soundscape versions of your existing catalog. You can win back market share using generative AI today.’ ”

The prospect of reversing declining market share turned out to be irresistible even to an AI-averse company: Within two weeks of Stavitsky’s interview with Rolling Stone, Universal announced a deal with Endel to “enhance listeners’ wellness” with AI-derived soundscapes built from the company’s catalog.

IN EARLY 2023, EDM veteran David Guetta stood in front of a vast festival crowd and debuted a new song snippet, featuring an unwitting collaborator. Over thudding synth bass, Eminem’s voice blared, spitting dopey lyrics impossible to imagine from the artist who once rapped that “nobody listens to techno”: “This is the future rave sound/I’m getting awesome and underground.” The crowd loved it.

Guetta is deeply excited about voice-cloning, and AI in general. “A lot of people are kind of freaking out,” Guetta says. “I just see this as another tool for us to make better records, make better demos.” He agrees with Boomy’s Mitchell that AI will continue the decades-long, computer-driven democratization of music-making. “But if you have terrible taste, your music is still gonna be terrible, even with AI,” he adds. “You can use the voice of Drake and the Weeknd, and Michael [Jackson], and Prince at the same time. If your song sucks, it’s still going to be a bad song.”

Guetta thinks AI-fueled songwriting is inevitable, especially because he sees songwriting as simply the result of musicians rearranging the corpus of music they’ve encountered in their lives. “We all do what we’ve learned,” he says. “The difference is that AI is going to be able to learn everything. So, of course, the AI is going to win in the end. One day, I hope, you’re gonna say, ‘I want to make a soul record,’ and AI will have all the soul chord progressions in history, and with the exact percentage of the ones that have been the most successful, and the key that is the most favorable for this chord progression. You cannot fight with this. It is impossible. So again, I think that more and more is going to be about taste, and not only technical abilities.”

As it happens, AI researcher Pachet directly rejects that view. “This argument of the finiteness of the vocabulary of music was put forward by the Vienna school in the beginning of the 20th century,” he says. “In 1910, they said, ‘You know, tonal music is dead, because everything has been done.’ That was before jazz, before the Beatles, before Antônio Carlos Jobim, before everything that happened after that.”

Pachet’s AI-music work is now in its fourth decade, most recently at Spotify, where he worked on music-making tools that may never see public release. Despite dedicating his life to the technology, he can’t help feeling disappointed so far. Like his friend Carré, what he wants from AI is something truly novel: “If you can’t say, ‘Oh, my God, how could they do that?’ you miss the point. You need new technology that creates something where people don’t understand how you could do this without some kind of magic. And I don’t think it’s the case today.”

THE CLOSEST THING to magic in AI music right now is a tool created by one of the world’s biggest tech companies. Google announced the existence of MusicLM, which attempts to do for music what ChatGPT does for text, back in January. The accompanying white paper said Google had “no plans” to release it to the public, citing, in part, concerns that one out of every 100 pieces of music it generated could be traced to copyrighted sources. (Universal has expressed alarm about the prospect of having its song catalog scraped for AI training, suggesting massive legal battles to come.) But as public interest exploded in AI this year, and Google began to look like it had fallen behind ChatGPT creator OpenAI, it began to move with less caution. In May, Google released MusicLM in a beta version, open to test users only. (OpenAI released its own music-generation tool, Jukebox, in 2020, but it only runs locally and reportedly takes 12 hours to do what MusicLM does in seconds.)

In its current state, MusicLM is astonishing and awful, sometimes both at once. (Google declined an interview on the software.) The mere fact of what it’s doing — generating fresh, high-fidelity audio files from thin air — is awe-inspiring, even when it sounds terrible. Ask it to approximate 1930s Delta blues or British Invasion rock and it will give you something fractured and bizarre, as if sourced from vinyl that’s not just warped but possibly melted on a stove. But it’s decent at generating generic trap beats, and nearly spectacular at conjuring funky blasts of retro-futuristic dance music in the vein of Daft Punk. That said, if you actually use the words Daft Punk — or any other artist name — in your prompt, it gives you an “Oops, can’t generate audio for that” message, almost certainly an attempt at copyright protection.

And then there are the voices. In its released version, MusicLM doesn’t generate prominent lead vocals, and nothing like lyrics. But on many clips, even when you don’t request them, ghostly vocals are beginning to emerge. In one case, a frontman who never lived seems to count off a nonexistent band. Other times, you can hear the whispers gathering themselves into something louder, more melodic. Somewhere deep in some distant server farm, something incalculably smart and eerily powerful is readying itself to sing.

_____

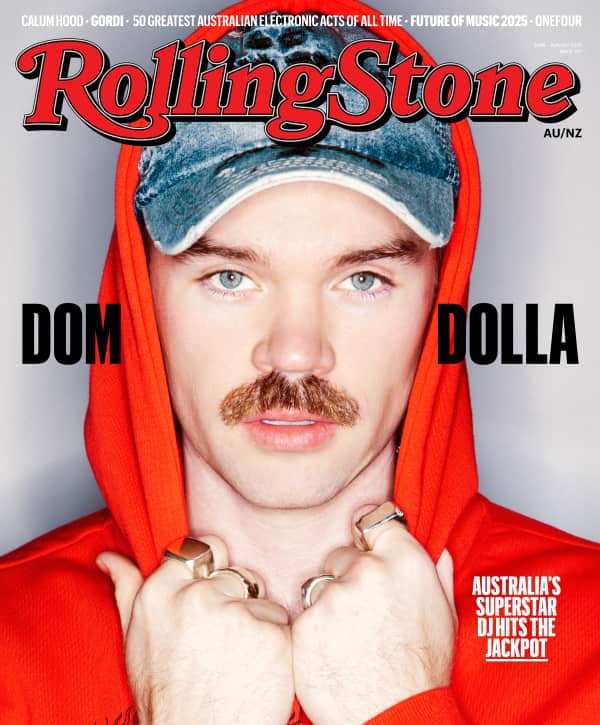

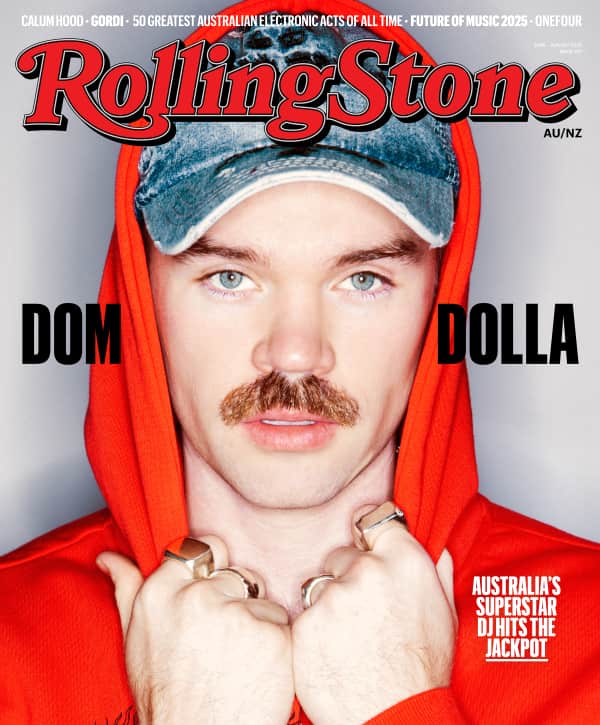

About the illustration: For this year’s Future of Music issue, “Rolling Stone” collaborated with BRIA AI — a revolutionary startup in the visual generative artificial-intelligence space that provides a fully licensed repository that compensates artists — and illustrator Tomer Hanuka. In tandem with generative AI, Hanuka designed an almost 100 percent synthetic image of Elvis Presley for “Hit Machines? The Messy Rise of AI Music.” The goal was to reimagine the King as a cyborg. “You’re praying for these happy accidents,” Hanuka says of the unusual and unpredictable process of working with a robot. But Hanuka learned to lean into the weirdness that AI came up with, like giving the cyborg Elvis three faces. As for the ethical and moral dilemma that comes with visual generative AI, a legally sound solution like BRIA AI gives Hanuka peace of mind that he’s not infringing on other artists and their work. “I don’t think [AI] is going to replace artists,” Hanuka makes clear. “I think it’s going to enhance them.” After all, it took several rounds of hand-drawn sketches and back-and-forth discussions about the desired atmosphere to create the Elvis illustration. “Creativity, visualizing, conceptualizing, and having an intent,” Hanuka says, “is still something that humans have.” —Maya Georgi

From Rolling Stone US