To the tune of “Pew Pew Pew” by Auntie Hammy, a woman in scrubs dances as the words flash on screen: “People that were never tested are added to coronavirus death toll,” and “the virus is nowhere near as deadly as experts predicted.” Another TikTok features her in a leopard-print bikini, with the caption “Google the number us6506148b2,” a reference to a so-called “mind control” patent that conspiracy theorists often cite and that the anti-vaccine movement has recently co-opted to preemptively attack a COVID-19 vaccine.

These videos represent a small fraction of the COVID-19-related conspiracy theory content on the platform, some of which has racked up hundreds of thousands of views. Over the past few months, as the pandemic has claimed hundreds of thousands of lives around the globe, social media platforms have been intensely criticized for allowing misinformation about the coronavirus to flourish. Last month, Rolling Stone reported on a viral video featuring noted anti-vaxxer and doctor Annie Bukacek, which received millions of views on YouTube and was shared widely on Facebook and Twitter despite perpetuating debunked claims about inflated COVID-19 death tolls. In May, Plandemic, a documentary featuring disgraced doctor Judy Mikovits voicing baseless claims such as that wearing masks increases the risk of contracting COVID-19, went viral on Facebook and YouTube, prompting intense criticism of both platforms (which ultimately removed the video, though copies continue to be shared).

Throughout this discussion, TikTok has largely been spared scrutiny from watchdogs and regulators. At a UK meeting last month, representatives from most of the major social media companies, with the exception of TikTok, spoke with lawmakers about combating misinformation on their platforms. That’s in part because the app is not considered a serious purveyor of news; the public generally views it as a platform for viral dances. But in effect, that may make it more dangerous.

“I think there are some broader concerns about this style and rhythm of online content that lend itself to the affective charge of conspiracy theory,” says Mark Andrejevic, a professor of media studies at Monash University. “These theories don’t work well in contemplative, deliberative contexts. They make no sense. They are pure affective charge, which means they lend themselves to a quick hit, viral platform.”

Publicly, TikTok has vowed to curb misinformation on its platform. As the novel coronavirus was ravaging Wuhan in January, it revised its community guidelines to specifically prohibit misinformation “that may cause harm to an individual’s health, such as misleading information about medical treatments” as well as “misinformation meant to incite fear, hate, or prejudice.” On April 30th, TikTok’s director of trust and safety rolled out a new in-app misinformation reporting feature, which would allow users to report content that contains intentionally deceptive information; a subcategory of such content relates specifically to COVID-19.

Yet coronavirus conspiracy theories have continued to proliferate on the platform. “They have this policy no other platform has had,” says Alex Kaplan, a senior researcher for Media Matters, who focuses on misinformation. “And from what I’ve seen, they’ve really struggled to enforce it.” As Media Matters previously reported, TikTok played host to the spread of #filmyourhospital, a hashtag with more than 132,000 views that encouraged people to film their local ERs, ostensibly to show how uncrowded they were, as a way to downplay the impact of COVID-19. The hashtag is still live on the platform. TikTok users have also promoted the conspiracy theory that 5G towers are linked to the spread of the novel coronavirus, including in videos that appeared to document users burning down 5G towers.

Some of the most popular videos sit at the nexus of anti-vaccine and anti-government conspiracy content, thanks in part to the heightened presence of QAnon accounts on the platform. One video with more than 457,000 views and 16,000 likes posits that Microsoft’s role as a founding partner of the digital ID program ID2020 Alliance serves the ultimate goal of “combining mandatory vaccines with implantable microchips,” with the hashtags #fvaccines and #billgates. Another popular conspiracy theory, particularly among evangelicals, holds that the government is attempting to implant chips in unwitting subjects by way of a vaccine. Some Christians view this as the “Mark of the Beast,” a reference to a passage in the Book of Revelation alluding to the mark of Satan. The #markofthebeast hashtag has more than 2.3 million combined views on TikTok, and some videos with the hashtag have likes in the tens of thousands.

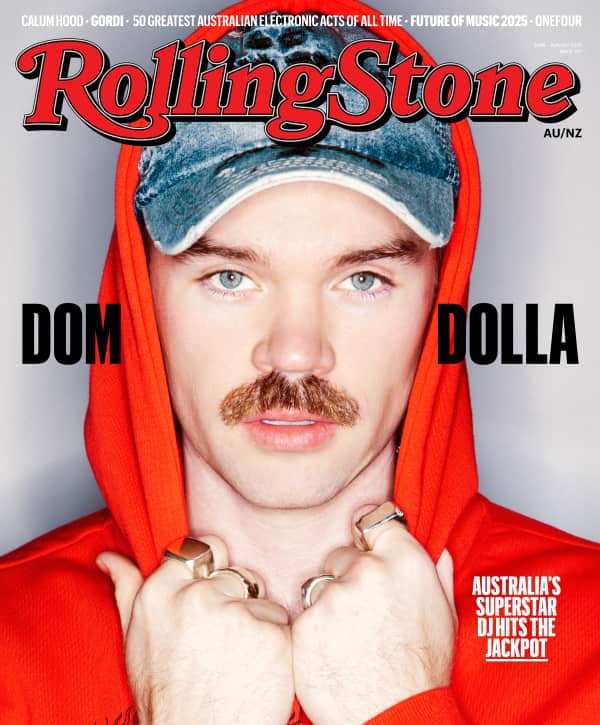

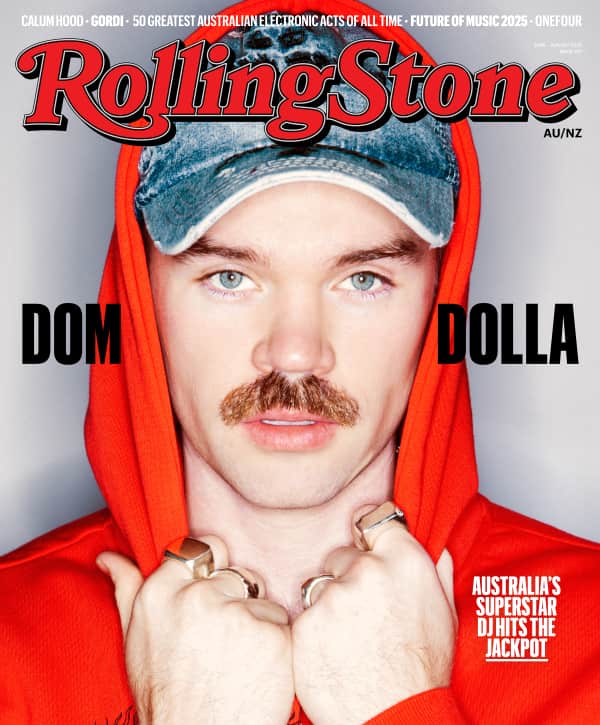

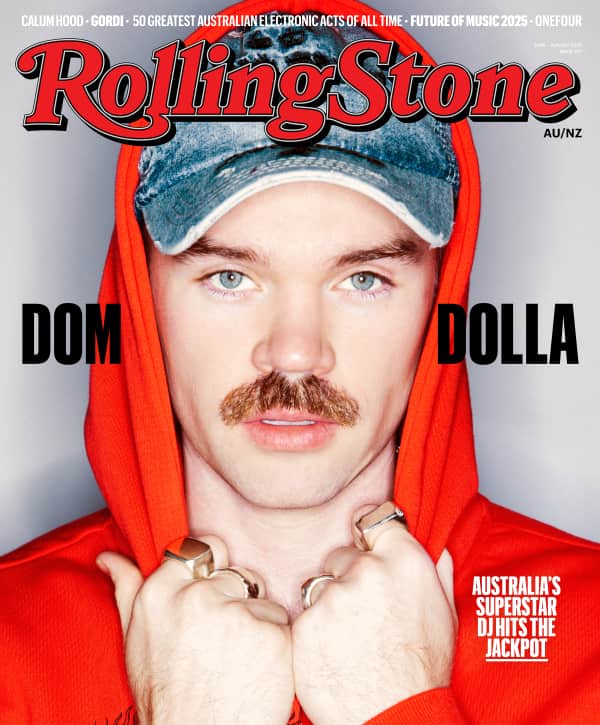

“Automatically, when I hear about the Mark of the Beast, I think about the coronavirus and the vaccine that’s gonna come out, ’cause if you really think about it, you don’t know what the fuck the vaccine’s gonna have in it,” one TikTok user says in a video from last month with more than 66,000 views, before declaring he’d rather die than get a vaccine. The video is one of the first few that show up when you search for the #vaccine hashtag, which has 42 million views.

Another TikTok with more than 285,000 views is among the first 20 results when you look up the hashtag #billgates. It promotes the idea that the COVID-19 pandemic is a pretext for adopting a cryptocurrency system patented by Microsoft (co-founded by conspiracy-theorist favorite Bill Gates) in order to institute governmental mind control. As evidence, the TikTok cites the patent number, which contains three sixes. Another user who frequently shares conspiracy content recently posted a TikTok set to Megan Thee Stallion’s “Savage”: “Bill Gates wants to chip us, track us and vax us/can’t convince us he’s not an assassin,” concluding with the admonition, “don’t vax.”

None of these posts features a link to authoritative COVID-19 information, which the platform began adding to coronavirus-related content in partnership with the World Health Organization (WHO) in March. In a statement, a TikTok spokesperson said: “We are committed to promoting a safe app environment where users can express their creativity. While we encourage users to have respectful conversations about the topics that matter to them, we remove reported content that is intended to deceive or mislead our community or the larger public.” The spokesperson did not address questions about the individual misinformation videos Rolling Stone found, whether those videos had been taken down, or why hashtags like #markofthebeast and #filmyourhospital are still on the app.

Of course, for an app of TikTok’s size and global reach, it’s inevitable that bad actors would use the platform to sow misinformation; because the pandemic has presented a golden opportunity for conspiracy theorists to gain mainstream visibility, it makes sense that some content would fall through the cracks. Yet the conspiracy-oriented content on TikTok, whose app surpassed 2 billion downloads last month, does not exist on the margins, nor is it particularly difficult to find, despite TikTok’s stated efforts to combat misinformation. And because TikTok’s algorithm notoriously surfaces the content users are most likely to engage with, the app also makes it easy for users to fall down the rabbit hole. “It’s YouTube on crack: a quicker, shorter fix, in keeping with the accelerated rhythm of non-stop online, mobile entertainment,” says Andrejevic.

And TikTok is doing so without attracting much attention from watchdog groups intent on curbing such misinformation, in part because of the app’s reputation as a harmless Gen Z diversion. “If you’re going to have these policies, you have to live up to what they suggest,” says Kaplan. “Because this can all be harmful to the public health and endanger lives at the end of the day.”